格式化后错误如下

找不到或无法加载主类org.apache.hadoop.hdfs.server.namenode.NameNode

执行hadoop version错误如下

找不到或无法加载主类 org.apache.hadoop.util.VersionInfo

有没有遇到过的,大神求解决方案

jdk安装目录/home/ubuntu/jdk-1.7

hadoop安装目录/home/ubuntu/hadoop-2.4.0

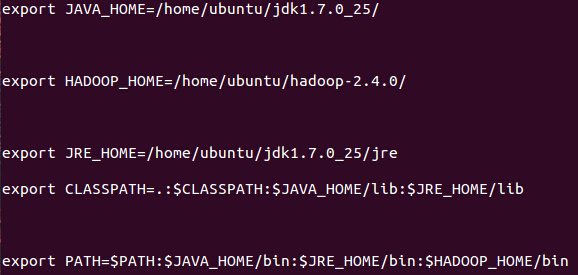

/etc/profile配置如下

hadoop-env.sh和yarn-env.sh

配置了export JAVA_HOME=/home/ubuntu/jdk-1.7

core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/hadoop/temp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

</configuration>

hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop01:9001</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/ubuntu/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/ubuntu/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop01:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop01:19888</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop01:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop01:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop01:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>hadoop01:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoop01:8088</value>

</property>

</configuration>

发帖

发帖 与我相关

与我相关 我的任务

我的任务 分享

分享