分享package cn.itcast.hadoop.hdfs;

import java.io.FileOutputStream;

import java.io.InputStream;

import java.io.OutputStream;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

public class HDFSDemo {

public static void main(String[] args) throws Exception{

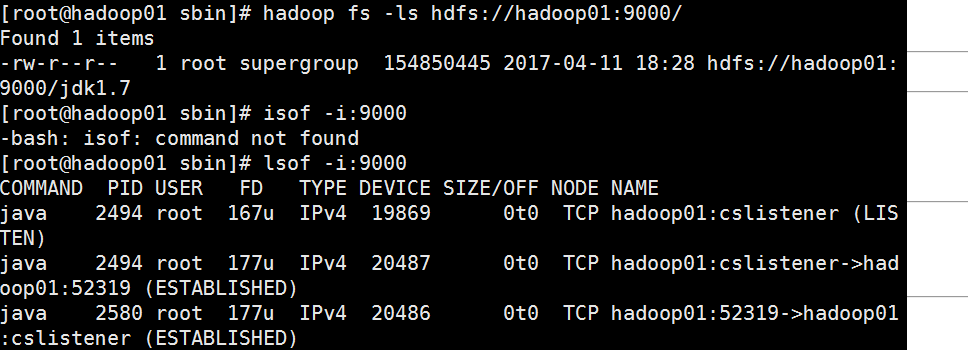

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop01:9000"), new Configuration());

InputStream in = fs.open(new Path("/jdk1.7"));

OutputStream out = new FileOutputStream("d://jdk1.7");

IOUtils.copyBytes(in, out, 4096, true);

}

}

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Exception in thread "main" java.net.ConnectException: Call From USER-20160404FC/192.168.8.100 to hadoop01:9000 failed on connection exception: java.net.ConnectException: Connection refused: no further information; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:783)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:730)

at org.apache.hadoop.ipc.Client.call(Client.java:1351)

at org.apache.hadoop.ipc.Client.call(Client.java:1300)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy7.getBlockLocations(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:186)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy7.getBlockLocations(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getBlockLocations(ClientNamenodeProtocolTranslatorPB.java:188)

at org.apache.hadoop.hdfs.DFSClient.callGetBlockLocations(DFSClient.java:1064)

at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:1054)

at org.apache.hadoop.hdfs.DFSClient.getLocatedBlocks(DFSClient.java:1044)

at org.apache.hadoop.hdfs.DFSInputStream.fetchLocatedBlocksAndGetLastBlockLength(DFSInputStream.java:235)

at org.apache.hadoop.hdfs.DFSInputStream.openInfo(DFSInputStream.java:202)

at org.apache.hadoop.hdfs.DFSInputStream.<init>(DFSInputStream.java:195)

at org.apache.hadoop.hdfs.DFSClient.open(DFSClient.java:1212)

at org.apache.hadoop.hdfs.DistributedFileSystem$3.doCall(DistributedFileSystem.java:290)

at org.apache.hadoop.hdfs.DistributedFileSystem$3.doCall(DistributedFileSystem.java:286)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.open(DistributedFileSystem.java:286)

at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:763)

at cn.itcast.hadoop.hdfs.HDFSDemo.main(HDFSDemo.java:17)

Caused by: java.net.ConnectException: Connection refused: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:529)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:493)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:547)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:642)

at org.apache.hadoop.ipc.Client$Connection.access$2600(Client.java:314)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1399)

at org.apache.hadoop.ipc.Client.call(Client.java:1318)

... 24 more
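
The trace bottoms out in `SocketChannelImpl.checkConnect` with "Connection refused", so the TCP connection to `hadoop01:9000` is being rejected before any HDFS logic runs. One way to narrow this down is to test the port from the same Windows client without Hadoop in the picture. The following is only a minimal sketch: the class name `PortCheck` and the method `canConnect` are hypothetical helpers, not part of Hadoop, and the default host and port are simply the ones from the code above.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    /** Returns true if a plain TCP connection to host:port succeeds within timeoutMs. */
    public static boolean canConnect(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, and unresolved host names all end up here.
            System.out.println("Cannot reach " + host + ":" + port + " -> " + e);
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults are assumptions taken from the HDFSDemo code; override via arguments.
        String host = args.length > 0 ? args[0] : "hadoop01";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9000;
        System.out.println(host + ":" + port + " reachable: " + canConnect(host, port, 3000));
    }
}
```

If this check also fails, the problem is likely outside the Java code. Common causes worth checking are that the NameNode is not running, that it listens on a different address or port than `hadoop01:9000`, that `hadoop01` resolves to the wrong IP on the client, or that a firewall on the server blocks the port.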