#coding:utf-8

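import requests
from bs4 import BeautifulSoup
import pymysql
import re
import random
# Local module from the author's project; the star import supplies change_proxy1(),
# which refreshes the proxy_ip table
from spider.change_proxy_Test import *
import time
from multiprocessing import Pool
import functools  # currently unused
import os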

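# User-Agent strings to rotate through (only one entry so far)
agents = [
    "Mozilla/5.0 (Linux; U; Android 2.3.6; en-us; Nexus S Build/GRK39F) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
]

# Cache of usable proxies loaded from proxy_ip_db: a tuple of 1-tuples, e.g. (('1.2.3.4:8080',), ...)
proxyIP = ()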

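# Automatically pick a random User-Agent and set Host from the URL
def change_UserAgent_auto(url):
    # Accept http:// and https:// URLs, with or without a trailing path
    # (the original pattern r'http://(.*?)/' only matched http:// URLs ending in '/')
    host = re.findall(r'https?://([^/]+)', url)[0]
    headers = {'Host': host, 'User-Agent': random.choice(agents)}
    return headers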
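change_UserAgent_auto takes the host out of the URL with a regex. Below is a minimal sketch of the same idea using the standard library's urllib.parse.urlsplit, which handles http and https URLs with or without a path. build_headers and the placeholder AGENTS list are hypothetical names used only in this example. requests already sends a Host header derived from the URL, so setting it by hand is only needed when it must differ.

```python
import random
from urllib.parse import urlsplit

# Placeholder list for this sketch; the script keeps its own `agents` list
AGENTS = [
    "Mozilla/5.0 (Linux; U; Android 2.3.6; en-us; Nexus S Build/GRK39F) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1",
    "ExampleAgent/1.0 (placeholder)",
]


def build_headers(url, agents=AGENTS, rng=random):
    """Return a Host header taken from url plus a randomly chosen User-Agent."""
    host = urlsplit(url).netloc  # 'bbs.fang.com' for both http:// and https:// URLs
    return {'Host': host, 'User-Agent': rng.choice(agents)}


print(build_headers('http://bbs.fang.com/asp/bbs/bbs_forumsort.aspx?type=all')['Host'])
# bbs.fang.com
```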

def get_proxyIPfromDB():
    global proxyIP
    # database is a local connection; it is closed before the function returns
    database = pymysql.connect(host="localhost", port=3306, user="zoe", passwd="1235789y",
                               db="proxy_ip_db", charset="utf8")
    try:
        cursor = database.cursor()
        cursor.execute('select ip_port from proxy_ip where useful=1')
        # fetchall() returns a tuple of rows, each a 1-tuple: (('ip:port',), ...)
        proxyIP = cursor.fetchall()
    finally:
        database.close()
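get_proxyIPfromDB stores the raw fetchall() result, a sequence of one-element row tuples, so the rest of the script has to unwrap each row. Below is a sketch of flattening the rows right after the query. An in-memory SQLite database stands in for the MySQL proxy_ip_db (SQLite uses `?` placeholders where pymysql uses `%s`), and load_useful_proxies is a hypothetical helper name.

```python
import sqlite3
from contextlib import closing


def load_useful_proxies(conn):
    """Return the ip_port values of rows with useful=1 as a flat list of strings."""
    with closing(conn.cursor()) as cursor:
        cursor.execute('select ip_port from proxy_ip where useful=1')
        # Every fetched row is a 1-tuple such as ('10.0.0.1:8080',); keep row[0]
        return [row[0] for row in cursor.fetchall()]


# In-memory SQLite standing in for the MySQL proxy_ip_db used by the script
with closing(sqlite3.connect(':memory:')) as conn:
    conn.execute('create table proxy_ip (ip_port text, useful integer)')
    conn.executemany('insert into proxy_ip values (?, ?)',
                     [('10.0.0.1:8080', 1), ('10.0.0.2:3128', 0), ('10.0.0.3:80', 1)])
    proxies = load_useful_proxies(conn)

print(proxies)
```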

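# Pick a random proxy and build the proxies dict expected by requests
def change_proxyIP_auto():
    # Each row is a 1-tuple, so row[0] is the 'ip:port' string
    # (the original str(row)[2:-3] slicing depended on the tuple's repr)
    proxy = random.choice(proxyIP)[0]
    # Plain HTTP proxies are addressed with http:// for both keys;
    # requests tunnels HTTPS traffic through them with CONNECT
    return {
        'http': 'http://' + proxy,
        'https': 'http://' + proxy,
    }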
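The original change_proxyIP_auto unwrapped the row with `str(row)[2:-3]`. That only works because the repr of a one-element tuple such as `('1.2.3.4:8080',)` starts with two extra characters and ends with three. Below is a sketch that indexes the row instead and builds the proxies dict that requests.get accepts. make_proxies and pick_proxies are hypothetical names, and the http:// scheme for both keys assumes ordinary (non-TLS) HTTP proxies.

```python
import random


def make_proxies(row):
    """Build the proxies dict for requests from a DB row like ('1.2.3.4:8080',)."""
    proxy = row[0]  # index the tuple instead of slicing its repr
    # Assumes plain HTTP proxies: requests reaches https:// URLs through them via CONNECT
    return {'http': 'http://' + proxy, 'https': 'http://' + proxy}


def pick_proxies(rows, rng=random):
    """Choose a random row and turn it into a proxies dict."""
    return make_proxies(rng.choice(rows))


row = ('1.2.3.4:8080',)
print(str(row)[2:-3] == row[0])  # True, but only because of how repr formats a 1-tuple
print(make_proxies(row))
```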

# Worker for get_article: download one post and save its text and replies.
# The author's note was to wrap this block into a function. It must be a
# module-level function, because Pool pickles the callable it sends to worker
# processes and functions nested inside another function cannot be pickled.
def fetch_article(a_url, title, headers, proxy_ip):
    try:
        print(a_url)
        print(title)
        res = requests.get(a_url, headers=headers, proxies=proxy_ip, timeout=10)
        #soup = BeautifulSoup(res.text,'lxml',from_encoding='utf-8')
        article_id = re.findall(r'_(.*?)\.htm', a_url)[0]  # not used further
        # The opening post and each reply sit in their own "invitation" block;
        # '举报' ("Report") is the link text that closes each block
        invitations = re.findall(r'div class="invitation">[\s\S]*?<div class="itcom"[\s\S]*?举报', res.text)
        # Opening post: try the t_f cell first, then fall back to the HTML_body div
        content = re.findall(r't_f.*?>(.*?)</td>', invitations[0])
        if content:
            content = content[0]
        else:
            content = re.findall(r'<div id="HTML_body.*">([\s\S]*?)<br /> <br />', invitations[0])[0]
        # Strings are immutable, so the result of re.sub must be reassigned:
        # turn every HTML tag into a space, then drop all whitespace
        content = re.sub(r'<[^>]*>', ' ', content)
        content = re.sub(r'\s+', '', content)
        # Replies: concatenate the text of every later invitation block
        comment = ''
        for invitation in invitations[1:]:
            cm = re.findall(r'<div id="HTML_body.*">([\s\S]*?)<br /> <br />', invitation)[0]
            if '</div>' in cm:
                comment += re.findall(r'</div>[\s\S]*?<p>([\s\S]*?)</p>', cm)[0]
            elif '</p>' in cm:
                comment += re.findall(r'<p>([\s\S]*?)</p>', cm)[0]
            else:
                comment += cm
        comment = re.sub(r'\s+', '', comment.replace('<br />', ''))
        sql = 'insert into content(title,a_url,content,comment) values(%s,%s,%s,%s)'
        saveDB(sql, (title, a_url, content, comment))
    except Exception as e:
        # Pool.apply_async swallows worker exceptions, so report them here
        print('fetch_article failed:', a_url, repr(e))

def get_article(database, headers):
    cursor = database.cursor()
    # Read title and URL in one query so the two columns stay paired row by row
    cursor.execute('select title, a_url from article')
    articles = cursor.fetchall()
    p = Pool(4)  # 4 worker processes
    #for i in range(20):
    for i, (title, a_url) in enumerate(articles):
        # When the current batch of working proxies is nearly used up, refresh the
        # proxy table (change_proxy1 fetches about 10 new proxies) and reload it
        if i % (len(proxyIP) * 30) == 0:
            print('@@@@@@@@@@@@@@get_article: proxy batch nearly used up, refreshing the proxy IP table')
            change_proxy1()
            get_proxyIPfromDB()
        # Switch to a new random proxy every 10 articles
        if i % 10 == 0:
            proxy_ip = change_proxyIP_auto()
        p.apply_async(fetch_article, args=(a_url, title.strip(), headers, proxy_ip))
    p.close()
    p.join()
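The author's own note in get_article suggests replacing the findall-and-replace loop with re.sub. Below is a minimal sketch of that cleanup step. One substitution turns every tag into a space, and a second removes all whitespace, including the non-breaking (`\xa0`) and full-width (`\u3000`) spaces that `\s` matches in Python 3 str patterns. clean_post is a hypothetical name.

```python
import re

TAG_RE = re.compile(r'<[^>]*>')   # any HTML tag, even one spanning several lines
SPACE_RE = re.compile(r'\s+')     # ASCII and Unicode whitespace (\xa0, \u3000, ...)


def clean_post(fragment):
    """Replace each HTML tag with a space, then delete all whitespace."""
    text = TAG_RE.sub(' ', fragment)  # re.sub returns a new string
    return SPACE_RE.sub('', text)


print(clean_post('<p>你好, <b>世界</b></p>\xa0\u3000 ok<br />'))  # 你好,世界ok
```

Because strings are immutable, re.sub returns a new string. Assigning the result back is what makes the cleanup take effect, the same point the original comment makes about str.replace.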

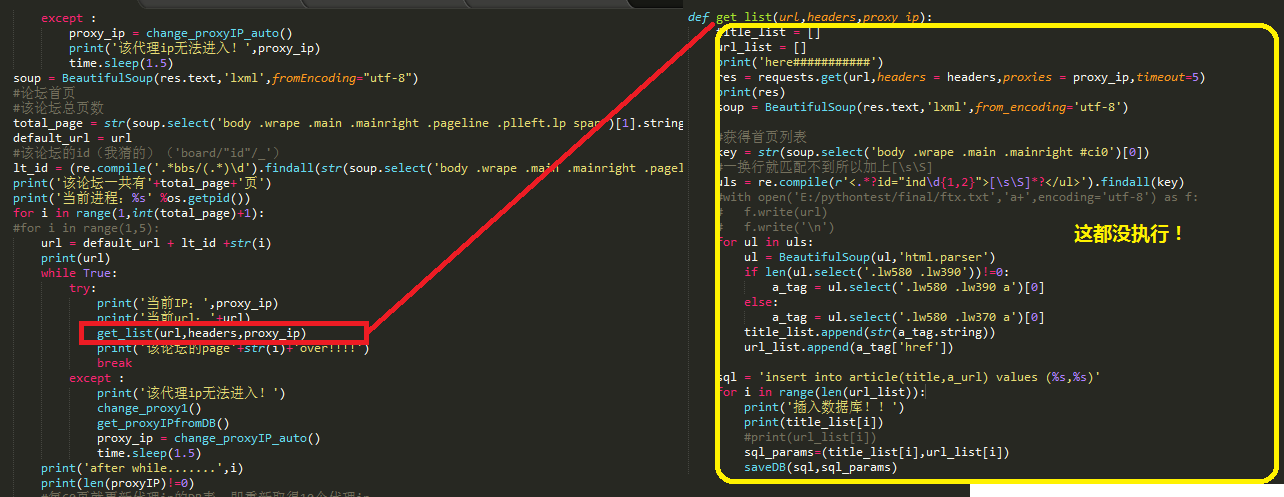

def get_list(url, headers, proxy_ip):
    title_list = []
    url_list = []
    res = requests.get(url, headers=headers, proxies=proxy_ip, timeout=10)
    # res.text is already decoded text, so a from_encoding argument would be ignored
    soup = BeautifulSoup(res.text, 'lxml')
    # Article list on this page of the forum
    key = str(soup.select('body .wrape .main .mainright #ci0')[0])
    # '.' does not match newlines, so [\s\S] is used to span multi-line <ul> blocks
    uls = re.findall(r'<.*?id="ind\d{1,2}">[\s\S]*?</ul>', key)
    #with open('E:/pythontest/final/ftx.txt','a+',encoding='utf-8') as f:
    #    f.write(url)
    #    f.write('\n')
    for ul in uls:
        ul = BeautifulSoup(ul, 'html.parser')
        # The title link sits in .lw390 on some rows and in .lw370 on others
        if ul.select('.lw580 .lw390'):
            a_tag = ul.select('.lw580 .lw390 a')[0]
        else:
            a_tag = ul.select('.lw580 .lw370 a')[0]
        title_list.append(str(a_tag.string))
        url_list.append(a_tag['href'])
    sql = 'insert into article(title,a_url) values (%s,%s)'
    for title, a_url in zip(title_list, url_list):
        print('Inserting into database!!')
        print(title)
        #print(a_url)
        saveDB(sql, (title, a_url))
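get_list uses `[\s\S]` so the pattern can cross line breaks. The `re.DOTALL` flag gives `.` the same power. The sketch below uses a made-up two-item list as input and checks that both forms return the same matches here, while a plain `.` finds nothing. DOTALL applies to every `.` in the pattern, including the leading `<.*?`, so the targeted `[\s\S]` is the narrower choice.

```python
import re

# Made-up sample markup shaped like the forum's article list
html = '''<ul id="ind1">
  <li><a href="/a_1.htm">first</a></li>
</ul>
<ul id="ind2">
  <li><a href="/a_2.htm">second</a></li>
</ul>'''

# The script's pattern: [\s\S] matches any character, newline included
by_class = re.findall(r'<.*?id="ind\d{1,2}">[\s\S]*?</ul>', html)
# The same idea with '.' plus re.DOTALL, which lets every '.' cross newlines
by_flag = re.findall(r'<.*?id="ind\d{1,2}">.*?</ul>', html, re.DOTALL)
# Without either, '.*?</ul>' stops at the first newline and nothing matches
plain = re.findall(r'<.*?id="ind\d{1,2}">.*?</ul>', html)

print(by_class == by_flag, len(by_class), plain)
```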

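def get_LIST(url, headers, proxy_ip):
    print('get_LIST!!')
    # With the 'spawn' start method (the default on Windows and macOS) a worker
    # process re-imports this module, so its proxyIP cache starts out empty
    if not proxyIP:
        get_proxyIPfromDB()
    # Retry the forum's front page with a new proxy until one gets through
    while True:
        try:
            print('Current IP:', proxy_ip)
            print('Current URL: ' + url)
            res = requests.get(url, headers=headers, proxies=proxy_ip, timeout=3)
            print('Forum start!!!!')
            break
        except requests.RequestException:
            proxy_ip = change_proxyIP_auto()
            print('Proxy IP could not connect, switching to', proxy_ip)
            time.sleep(1.5)
    # Forum front page (res.text is already decoded, so no encoding argument is needed)
    soup = BeautifulSoup(res.text, 'lxml')
    # Total number of pages in this forum
    total_page = str(soup.select('body .wrape .main .mainright .pageline .plleft.lp span')[1].string)
    default_url = url
    # The forum's id (my guess), taken from the pager link ('board/"id"/_')
    pager_href = str(soup.select('body .wrape .main .mainright .pageline .page .pagefy a')[0]['href'])
    lt_id = re.findall(r'.*bbs/(.*)\d', pager_href)[0]
    print('This forum has ' + total_page + ' pages')
    print('Current process: ' + str(os.getpid()))
    #for i in range(1,5):
    for i in range(1, int(total_page) + 1):
        url = default_url + lt_id + str(i)
        print(url)
        while True:
            try:
                print('Current IP:', proxy_ip)
                print('Current URL: ' + url)
                get_list(url, headers, proxy_ip)
                print('Forum page ' + str(i) + ' over!!!!')
                break
            except Exception:
                print('Proxy IP could not connect!')
                proxy_ip = change_proxyIP_auto()
                time.sleep(1.5)
        # After about 20 pages per proxy in the current batch (60 pages with 3 proxies),
        # refresh the proxy table (change_proxy1 fetches about 10 new proxies) and reload it
        if i % (len(proxyIP) * 20) == 0:
            print('@@@@@@@@@@@@@@@@@@@@@get_LIST: proxy batch used up, refreshing the proxy IP table')
            change_proxy1()
            get_proxyIPfromDB()
        # Switch proxy every 10 pages (this changes the proxies argument of requests.get)
        if i % 10 == 0:
            proxy_ip = change_proxyIP_auto()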
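get_LIST retries each request in a `while True` loop and switches proxies after every failure, so a forum stays stuck forever if no proxy works. Below is a sketch of the same rotation with an upper bound on attempts. fetch_with_rotation, its parameters and the fake fetch in the demo are hypothetical. In the real script, fetch would wrap requests.get or get_list.

```python
import itertools
import time


def fetch_with_rotation(fetch, url, proxies, max_attempts=5, delay=1.5, sleep=time.sleep):
    """Try fetch(url, proxy) with successive proxies; return (result, proxy) on success.

    Gives up with RuntimeError after max_attempts failures instead of looping forever.
    """
    last_error = None
    for proxy in itertools.islice(itertools.cycle(proxies), max_attempts):
        try:
            return fetch(url, proxy), proxy
        except Exception as e:  # in the scraper this would be requests.RequestException
            print('proxy failed:', proxy, repr(e))
            last_error = e
            sleep(delay)
    raise RuntimeError('all %d attempts failed for %s' % (max_attempts, url)) from last_error


# Demo with a fake fetch function: only the third proxy "works"
tried = []


def fake_fetch(url, proxy):
    tried.append(proxy)
    if proxy != 'p3':
        raise ConnectionError('dead proxy')
    return 'page for ' + url


page, used = fetch_with_rotation(fake_fetch, 'http://example.com/1', ['p1', 'p2', 'p3'],
                                 sleep=lambda seconds: None)
```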

# Crawl the list of forums ("luntan")
def get_luntan(url, headers):
    lt_name = []
    # lt_url is global because fangtianxiaSpider() reads it afterwards
    global lt_url
    lt_url = []
    article_count = []
    res = requests.get(url, headers=headers, timeout=10)
    # res.text is already decoded, so no from_encoding argument is needed
    soup = BeautifulSoup(res.text, 'lxml')
    lt_list = soup.select('body .articleAre2 .box48L .box13Menu2')
    # Go through the forums one by one, collecting name, URL and article count
    for lt in lt_list:
        tags = lt.select('.box48MenuR .box48ListRL a')
        lt_name.append(str(tags[0].string))
        lt_url.append(tags[0]['href'])
        article_count.append(str(lt.select('.box48MenuR .box48ListRR3 .s1')[2].string))
    # Save the forum addresses to the database
    sql = 'insert into luntan(lt_name,lt_url,article_count) values (%s,%s,%s)'
    for sql_params in zip(lt_name, lt_url, article_count):
        saveDB(sql, sql_params)
    print(len(lt_url))
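get_luntan builds three parallel lists and then calls saveDB once per forum, opening a new connection each time. Another option is to zip the lists into rows and send them in a single executemany call; pymysql cursors also provide executemany, with `%s` placeholders. The sketch uses an in-memory SQLite database as a stand-in. save_forums and the sample forum values are made up.

```python
import sqlite3
from contextlib import closing


def save_forums(conn, names, urls, counts):
    """Insert parallel lists as rows with one executemany call, then commit."""
    rows = list(zip(names, urls, counts))  # zip stops at the shortest list
    conn.executemany('insert into luntan(lt_name, lt_url, article_count) values (?, ?, ?)', rows)
    conn.commit()
    return len(rows)


# In-memory SQLite standing in for the MySQL luntan table
with closing(sqlite3.connect(':memory:')) as conn:
    conn.execute('create table luntan (lt_name text, lt_url text, article_count text)')
    inserted = save_forums(conn, ['forum A', 'forum B'],
                           ['http://a.example/', 'http://b.example/'], ['12', '7'])
    stored = conn.execute('select lt_name, article_count from luntan order by lt_name').fetchall()

print(inserted, stored)
```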

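def saveDB(sql, sql_params):
    # Opens its own connection per call, so no connection is shared between processes
    database = pymysql.connect(host="localhost", port=3306, user="zoe", passwd="1235789y",
                               db="fangtianxiadb", charset="utf8")
    try:
        cursor = database.cursor()
        cursor.execute(sql, sql_params)
        database.commit()
    except Exception as e:
        # Roll back on error; e is an exception object, so it cannot be joined to a str with '+'
        print(repr(e), 'error sql')
        database.rollback()
    finally:
        # Close the connection on success and on failure
        database.close()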

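def fangtianxiaSpider():
    database = pymysql.connect(host="localhost", port=3306, user="zoe", passwd="1235789y",
                               db="fangtianxiadb", charset="utf8")
    # Clear the proxy IP table and reload it (done by change_proxy1() in __main__)
    # Crawl the forum list
    init_url = 'http://bbs.fang.com/asp/bbs/bbs_forumsort.aspx?type=all'
    headers = change_UserAgent_auto(init_url)
    get_proxyIPfromDB()
    proxy_ip = change_proxyIP_auto()
    get_luntan(init_url, headers)
    print('******Forum list crawled******')
    # Go through the forum list, crawling each forum.
    # Idea: crawl forums with multiple processes (4 processes handle 4 forums at once);
    # inside each forum, a single process crawls the article list.
    p = Pool(4)
    results = []
    for lt in lt_url:
        print('Current forum:', lt)
        headers = change_UserAgent_auto(lt)
        results.append(p.apply_async(get_LIST, args=(lt, headers, proxy_ip)))
    p.close()
    p.join()
    # apply_async only re-raises a worker's exception when .get() is called
    for r in results:
        try:
            r.get()
        except Exception as e:
            print('get_LIST failed:', repr(e))
    # Article list crawl finished
    # Crawl article content and store it in the content table
    get_article(database, headers)
    database.close()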
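Pool.apply_async pickles the callable it sends to worker processes, and it does not raise a worker's exception in the parent until `.get()` is called on the returned AsyncResult. That combination is why a function nested inside another function, like acontent_subprocess in the original get_article, can fail without any message. Below is a small sketch of both points. The function names are hypothetical, and a thread pool (multiprocessing.pool.ThreadPool, which needs no pickling) stands in for a process pool in the second check.

```python
import pickle
from multiprocessing.pool import ThreadPool


def make_nested_worker():
    # Defined inside another function, like acontent_subprocess in get_article
    def nested_worker(x):
        return x * 2
    return nested_worker


def is_picklable(obj):
    """Return True if pickle can serialize obj, as Pool must for its task callables."""
    try:
        pickle.dumps(obj)
        return True
    except (pickle.PicklingError, AttributeError, TypeError):
        return False


def failing_task():
    raise ValueError('worker failed')


def error_surfaces_only_on_get():
    """Return (task_finished, error_seen_on_get) for a task that raises."""
    with ThreadPool(1) as pool:
        result = pool.apply_async(failing_task)
        result.wait()                  # returns normally even though the task raised
        task_finished = result.ready()
        try:
            result.get()               # the stored ValueError is re-raised here
        except ValueError:
            return task_finished, True
    return task_finished, False


print(is_picklable(make_nested_worker()))  # False
print(is_picklable(len))                   # True
```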

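# The __main__ guard is required when Pool uses the 'spawn' start method
if __name__ == '__main__':
    # Refresh the proxy IP table, load it, then start crawling
    change_proxy1()
    get_proxyIPfromDB()
    fangtianxiaSpider()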