
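% featureNormalize.m
function [X_norm, mu, sigma] = featureNormalize(X)
% Normalizes each feature (column) of X to zero mean and unit standard deviation.
% Also returns mu and sigma so that new examples can be normalized the same way.
% Note: this version assumes X has exactly two feature columns.

format short

X_norm = X;
mu = zeros(1, size(X, 2));
sigma = zeros(1, size(X, 2));
m = size(X_norm, 1);    % number of training examples

% Compute the mean of each feature
% Loop equivalent, kept for reference:
% for i = 1:m
%   mu(1) = mu(1) + X_norm(i,1);
%   mu(2) = mu(2) + X_norm(i,2);
% end
% mu(1) = mu(1)/m;
% mu(2) = mu(2)/m;
mu = sum(X_norm) / m;    % mean of each feature (1-by-2 row vector)

% Compute the standard deviation of each feature
% Loop equivalent for the sum of squared deviations, kept for reference:
% for i = 1:m
%   sigma(1) = sigma(1) + (X_norm(i,1) - mu(1))^2;
%   sigma(2) = sigma(2) + (X_norm(i,2) - mu(2))^2;
% end
X_norm(:,1) = X_norm(:,1) - mu(1);    % center each feature
X_norm(:,2) = X_norm(:,2) - mu(2);
sigma = sum(X_norm.^2);               % sum of squared deviations per feature
sigma(1) = sqrt(sigma(1)/m);          % population std (divides by m, not m-1)
sigma(2) = sqrt(sigma(2)/m);

% Normalize the features
% Loop equivalent, applied to the raw X rather than the centered X_norm:
% for i = 1:m
%   X_norm(i,1) = (X(i,1) - mu(1))/sigma(1);
%   X_norm(i,2) = (X(i,2) - mu(2))/sigma(2);
% end
X_norm(:,1) = X_norm(:,1) / sigma(1);
X_norm(:,2) = X_norm(:,2) / sigma(2);

end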
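The function above hard-codes two feature columns. As a hedged sketch, the same normalization can be written for any number of columns by letting `mean` and `std(X, 1)` work column-wise (`std(X, 1)` divides by m, matching the `sqrt(sigma/m)` used above) and using `bsxfun` so that the code runs in both MATLAB and Octave. The matrix `X` below is made-up toy data, not the contents of `data.txt`.

```matlab
% Hypothetical toy data: 4 examples, 3 features
X = [2104 3 1; 1600 3 2; 2400 3 3; 1416 2 4];

mu = mean(X);          % 1-by-n row of column means
sigma = std(X, 1);     % 1-by-n population std (normalized by m, as in featureNormalize)

% Subtract the mean and divide by the std in every column at once
X_norm = bsxfun(@rdivide, bsxfun(@minus, X, mu), sigma);

% Each column of X_norm now has mean ~0 and population std ~1
disp(mean(X_norm));
disp(std(X_norm, 1));
```

A feature that is constant across all examples has sigma = 0 and would cause a division by zero, so real code may need to guard against that case.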

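% computeCostMulti.m
function J = computeCostMulti(X, y, theta)
% Computes the linear-regression cost J = 1/(2m) * sum((X*theta - y).^2)
% of using theta as the parameters for the data X, y.

format short

m = length(y);    % number of training examples

% Equivalent alternatives, kept for reference:
% J = ((X*theta - y)') * (X*theta - y) / (2*m);
%
% total = 0;      % do not name this "sum": that would shadow the built-in sum()
% for i = 1:m
%   total = total + (X(i,:)*theta - y(i))^2;
% end
% J = total / (2*m);

err = X*theta - y;          % residual for every example (m-by-1)
J = sum(err.^2) / (2*m);

end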
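The commented-out loop and the vectorized line in computeCostMulti compute the same quantity, J(θ) = 1/(2m) Σ_i (x^(i)θ − y^(i))². A small check on hypothetical 3-example data (the first column is the intercept term) shows that both forms agree; for these values each works out to 18.75 / 6 = 3.125.

```matlab
% Hypothetical toy data: first column is the intercept term
X = [1 1 2; 1 2 0; 1 3 1];
y = [3; 2; 5];
theta = [0.5; 1; -1];
m = length(y);

% Loop version: accumulate squared errors one example at a time
total = 0;                 % not named "sum", which would shadow the built-in
for i = 1:m
  total = total + (X(i,:) * theta - y(i))^2;
end
J_loop = total / (2*m);

% Vectorized version: inner product of the residual vector with itself
err = X * theta - y;
J_vec = (err' * err) / (2*m);

fprintf('J_loop = %.4f, J_vec = %.4f\n', J_loop, J_vec);
```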

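% gradientDescentMulti.m
function [theta, J_history] = gradientDescentMulti(X, y, theta, alpha, num_iters)
% Runs num_iters steps of batch gradient descent with learning rate alpha
% and records the cost after every step in J_history.

m = length(y);    % number of training examples
J_history = zeros(num_iters, 1);
n = size(X, 2);   % number of parameters (features + intercept)

for iter = 1:num_iters
    % Reset the gradient accumulator on every iteration; otherwise the
    % gradients from all previous iterations keep piling up in temp.
    temp = zeros(n, 1);
    for j = 1:n
        for i = 1:m
            x = X(i,:);
            temp(j) = temp(j) + (x*theta - y(i)) * x(j);
        end
    end
    % Update all parameters simultaneously
    theta = theta - temp * alpha / m;

    % Save the cost after this iteration
    J_history(iter) = computeCostMulti(X, y, theta);
end

end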
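The double loop in gradientDescentMulti computes, for every j, the partial derivative (1/m) Σ_i (x^(i)θ − y^(i)) x_j^(i). Stacking all j at once gives the vectorized update θ := θ − (α/m) Xᵀ(Xθ − y). The minimal sketch below uses made-up data whose columns happen to be orthogonal, with `cost` as a hypothetical anonymous-function stand-in for computeCostMulti; it shows the cost decreasing every iteration and θ approaching the exact solution [2; 1; 0].

```matlab
% Hypothetical toy data (intercept column already added)
X = [1 -1 0.5; 1 0 -1; 1 1 0.5];
y = [1; 2; 3];
theta = zeros(3, 1);
alpha = 0.1;
num_iters = 200;
m = length(y);

% Cost as an anonymous function so the sketch stays a plain script
cost = @(t) sum((X*t - y).^2) / (2*m);

J_history = zeros(num_iters, 1);
for iter = 1:num_iters
  % All partial derivatives at once: grad(j) = (1/m) * sum_i (x_i*theta - y_i) * x_ij
  grad = X' * (X*theta - y) / m;
  theta = theta - alpha * grad;   % simultaneous update of every theta(j)
  J_history(iter) = cost(theta);
end

disp(theta');
```

Besides being shorter, the vectorized form avoids the per-element loops, which are slow in MATLAB/Octave for large m.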

clear ; close all; clc

fprintf('Loading data ...\n');

data = load('data.txt');

X = data(:, 1:2);

y = data(:, 3);

m = length(y);

fprintf('First 10 examples from the dataset:\n');

fprintf(' x = [%.0f %.0f], y = %.0f \n', [X(1:10,:) y(1:10,:)]');

fprintf('Program paused. Press enter to continue.\n');

pause;
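The line that prints the first 10 training examples relies on how fprintf consumes a matrix argument: it reads the values in column-major order and repeats the format string until they run out. Transposing `[X(1:10,:) y(1:10,:)]` makes each row (one training example) contiguous. The sketch below uses sprintf with hypothetical 2-example data to show the difference.

```matlab
% Hypothetical 2-example dataset: two features and one target per row
A = [10 2; 20 3];
b = [100; 200];
fmt = ' x = [%.0f %.0f], y = %.0f \n';

% sprintf/fprintf read numeric arguments in column-major order and reuse
% the format until all values are consumed. Transposing puts each training
% example's values next to each other.
with_transpose = sprintf(fmt, [A b]');
without_transpose = sprintf(fmt, [A b]);

disp(with_transpose);      % one line per example
disp(without_transpose);   % values from different examples get mixed up
```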

fprintf('Normalizing Features ...\n');

[X_norm mu sigma] = featureNormalize(X);

X_norm = [ones(m, 1) X_norm];

fprintf('Running gradient descent ...\n');

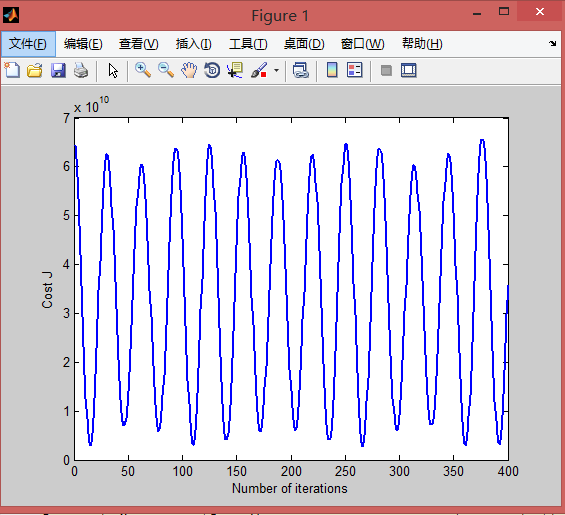

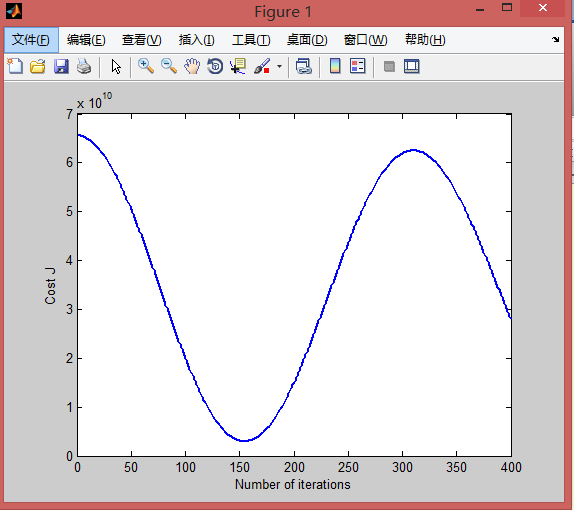
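About the choice of `alpha = 0.001`: because featureNormalize centers every feature, the gradient for the intercept θ0 reduces to θ0 − mean(y), so each iteration closes only a fraction α of the remaining gap. Starting from θ0 = 0 with α = 0.001 and 400 iterations, θ0 covers only about 1 − 0.999^400 ≈ 33% of the distance to mean(y). The sketch below, on hypothetical already-normalized data, runs the vectorized update for several learning rates and reports that fraction; plotting J_history for each α is a common way to pick one.

```matlab
% Hypothetical normalized toy data: intercept column plus one zero-mean feature
x = [-1.5; -0.5; 0.5; 1.5];
X = [ones(4, 1) x / std(x, 1)];   % scale the feature to population std 1
y = [10; 12; 15; 17];
m = length(y);
num_iters = 400;

alphas = [0.001 0.01 0.1 1];
theta0_after = zeros(size(alphas));
for k = 1:numel(alphas)
  theta = zeros(2, 1);
  for iter = 1:num_iters
    theta = theta - alphas(k) / m * X' * (X*theta - y);
  end
  theta0_after(k) = theta(1);
end

% Row 1: alpha; row 2: fraction of the way theta(1) has moved toward mean(y)
disp([alphas; theta0_after / mean(y)]);
```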

alpha = 0.001;

num_iters = 400;

theta = zeros(3, 1);

J0 = computeCostMulti(X_norm,y,theta)

[theta, J_history] = gradientDescentMulti(X_norm, y, theta, alpha, num_iters);

figure;

plot(1:numel(J_history), J_history, '-', 'LineWidth', 2);

xlabel('Number of iterations');

ylabel('Cost J');

fprintf('Theta computed from gradient descent:\n');

fprintf(' %f \n', theta);

fprintf('\n');
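featureNormalize returns `mu` and `sigma`, but the script above never uses them. They are needed for prediction: a new example has to be normalized with the training-set mean and standard deviation before the intercept 1 is prepended. The hedged sketch below uses hypothetical data (the features, targets, learning rate 0.1 and 2000 iterations are all illustrative choices) and compares that prediction with a least-squares fit on the raw features computed with the backslash operator; the two should agree once gradient descent has converged.

```matlab
% Hypothetical raw training data: two features per row, as in data.txt
X = [1 2; 2 1; 3 4; 4 3; 5 5];
y = [8; 7; 15; 14; 19];
m = length(y);

% Normalize with the training statistics (population std, as in featureNormalize)
mu = mean(X);
sigma = std(X, 1);
X_norm = bsxfun(@rdivide, bsxfun(@minus, X, mu), sigma);

% Fit theta on the normalized features (vectorized gradient descent)
A = [ones(m, 1) X_norm];
theta = zeros(3, 1);
for iter = 1:2000
  theta = theta - 0.1 / m * A' * (A*theta - y);
end

% Predict for a new raw example: apply the SAME mu and sigma, then add the intercept
x_new = [3 3];
x_new_norm = (x_new - mu) ./ sigma;
price = [1 x_new_norm] * theta;

% Cross-check: a least-squares fit on the raw features gives the same prediction
theta_raw = [ones(m, 1) X] \ y;
price_check = [1 x_new] * theta_raw;
fprintf('gradient descent: %.4f, least squares: %.4f\n', price, price_check);
```

Normalizing a new example with its own statistics, or forgetting to normalize it at all, would feed the model inputs on a different scale from the ones theta was trained on.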