```c
AVFormatContext *ofmt_ctx;

AVStream* video_st;

// Codec context for the audio/video stream, used for encoding and decoding.

AVCodecContext* pCodecCtx;

AVCodec* pCodec;

AVPacket enc_pkt; // Holds compressed data (H.264 etc. bitstream for video, AAC/MP3 etc. bitstream for audio)

AVFrame *pFrameYUV; // Holds uncompressed data (RGB/YUV pixels for video, PCM samples for audio)

int framecnt = 0;

int yuv_width;

int yuv_height;

int y_length;

int uv_length;

int64_t start_time;

//const char* out_path = "rtmp://192.168.2.176/live/livestream";

//Output FFmpeg's av_log()

void custom_log(void *ptr, int level, const char* fmt, va_list vl) {

FILE *fp = fopen("/storage/emulated/0/av_log.txt", "a+");

if (fp) {

vfprintf(fp, fmt, vl);

fflush(fp);

fclose(fp);

}

}

JNIEXPORT jint JNICALL Java_com_zhanghui_test_MainActivity_initial(JNIEnv *env,

jobject obj, jint width, jint height) {

const char* out_path = "rtmp://192.168.2.176/live/livestream";

yuv_width = width;

yuv_height = height;

y_length = width * height;

uv_length = width * height / 4;

//FFmpeg av_log() callback

av_log_set_callback(custom_log);

av_register_all();

avformat_network_init();

//output initialize

avformat_alloc_output_context2(&ofmt_ctx, NULL, "flv", out_path);

//output encoder initialize

// Takes a codec ID and returns the matching encoder, or NULL if none is found.

pCodec = avcodec_find_encoder(AV_CODEC_ID_H264);

if (!pCodec) {

LOGE("Can not find encoder!\n");

return -1;

}

pCodecCtx = avcodec_alloc_context3(pCodec);

pCodecCtx->pix_fmt = PIX_FMT_YUV420P;

pCodecCtx->width = width;

pCodecCtx->height = height;

pCodecCtx->time_base.num = 1;

pCodecCtx->time_base.den = 25;

pCodecCtx->bit_rate = 400000;

pCodecCtx->gop_size = 250;

/* Some formats want stream headers to be separate. */

if (ofmt_ctx->oformat->flags & AVFMT_GLOBALHEADER)

pCodecCtx->flags |= CODEC_FLAG_GLOBAL_HEADER;

//H264 codec param

//pCodecCtx->me_range = 16;

//pCodecCtx->max_qdiff = 4;

//pCodecCtx->qcompress = 0.6;

pCodecCtx->qmin = 10;

pCodecCtx->qmax = 51;

//Optional Param

pCodecCtx->max_b_frames = 1;

// Set H264 preset and tune

AVDictionary *param = 0;

// av_dict_set(&param, "preset", "ultrafast", 0);

// av_dict_set(&param, "tune", "zerolatency", 0);

av_opt_set(pCodecCtx->priv_data, "preset", "superfast", 0);

av_opt_set(pCodecCtx->priv_data, "tune", "zerolatency", 0);

// Open the encoder

if (avcodec_open2(pCodecCtx, pCodec, &param) < 0) {

LOGE("Failed to open encoder!\n");

return -1;

}

//Add a new stream to output,should be called by the user before avformat_write_header() for muxing

video_st = avformat_new_stream(ofmt_ctx, pCodec);

if (video_st == NULL) {

return -1;

}

video_st->time_base.num = 1;

video_st->time_base.den = 25;

video_st->codec = pCodecCtx;

//Open output URL,set before avformat_write_header() for muxing

if (avio_open(&ofmt_ctx->pb, out_path, AVIO_FLAG_READ_WRITE) < 0) {

LOGE("Failed to open output file!\n");

return -1;

}

//Write File Header

avformat_write_header(ofmt_ctx, NULL);

start_time = av_gettime();

return 0;

}

JNIEXPORT jint JNICALL Java_com_zhanghui_test_MainActivity_encode(JNIEnv *env,

jobject obj, jbyteArray yuv) {

int ret;

int enc_got_frame = 0;

int i = 0;

// Allocate the frame that holds the raw input for the encoder

pFrameYUV = avcodec_alloc_frame();

uint8_t *out_buffer = (uint8_t *) av_malloc(

avpicture_get_size(PIX_FMT_YUV420P, pCodecCtx->width,

pCodecCtx->height));

avpicture_fill((AVPicture *) pFrameYUV, out_buffer, PIX_FMT_YUV420P,

pCodecCtx->width, pCodecCtx->height);

// Android camera preview data is NV21; convert it to YUV420P here

jbyte* in = (jbyte*) (*env)->GetByteArrayElements(env, yuv, 0);

memcpy(pFrameYUV->data[0], in, y_length);

for (i = 0; i < uv_length; i++) {

*(pFrameYUV->data[2] + i) = *(in + y_length + i * 2);

*(pFrameYUV->data[1] + i) = *(in + y_length + i * 2 + 1);

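}

// Release the Java array; JNI_ABORT skips copying the unmodified data back

(*env)->ReleaseByteArrayElements(env, yuv, in, JNI_ABORT);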

pFrameYUV->format = AV_PIX_FMT_YUV420P;

pFrameYUV->width = yuv_width;

pFrameYUV->height = yuv_height;

enc_pkt.data = NULL;

enc_pkt.size = 0;

// After declaring an AVPacket, initialize it with av_init_packet()

av_init_packet(&enc_pkt);

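/** Encode one video frame

* int avcodec_encode_video2(AVCodecContext *avctx, AVPacket *avpkt,

const AVFrame *frame, int *got_packet_ptr);

The parameters are documented in the FFmpeg header comments; in brief:

avctx: the encoder's AVCodecContext.

avpkt: the AVPacket that receives the encoded output.

frame: the AVFrame to encode.

got_packet_ptr: set to 1 when an AVPacket has been produced successfully.

The function returns 0 on success.

*/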

ret = avcodec_encode_video2(pCodecCtx, &enc_pkt, pFrameYUV, &enc_got_frame);

av_frame_free(&pFrameYUV);

// avpicture_fill() only points the frame at out_buffer, so free it here

av_free(out_buffer);

if (enc_got_frame == 1) {

LOGI("Succeed to encode frame: %5d\tsize:%5d\n", framecnt,

enc_pkt.size);

framecnt++;

// Identify the audio/video stream this AVPacket belongs to.

enc_pkt.stream_index = video_st->index; // index of the video stream

//Write PTS

AVRational time_base = ofmt_ctx->streams[0]->time_base; //{ 1, 1000 };

AVRational r_framerate1 = { 60, 2 }; //{ 50, 2 };

AVRational time_base_q = { 1, AV_TIME_BASE };

//Duration between 2 frames (us)

int64_t calc_duration = (double) (AV_TIME_BASE)

* (1 / av_q2d(r_framerate1)); // in internal timestamp units

//Parameters

//enc_pkt.pts = (double)(framecnt*calc_duration)*(double)(av_q2d(time_base_q)) / (double)(av_q2d(time_base));

enc_pkt.pts = av_rescale_q(framecnt * calc_duration, time_base_q,

time_base);

enc_pkt.dts = enc_pkt.pts;

enc_pkt.duration = av_rescale_q(calc_duration, time_base_q, time_base); //(double)(calc_duration)*(double)(av_q2d(time_base_q)) / (double)(av_q2d(time_base));

enc_pkt.pos = -1;

//Delay

int64_t pts_time = av_rescale_q(enc_pkt.dts, time_base, time_base_q);

int64_t now_time = av_gettime() - start_time;

if (pts_time > now_time)

av_usleep(pts_time - now_time);

ret = av_interleaved_write_frame(ofmt_ctx, &enc_pkt);

av_free_packet(&enc_pkt);

}

// output(ofmt_ctx);

return 0;

}

JNIEXPORT jint JNICALL Java_com_zhanghui_test_MainActivity_flush(JNIEnv *env,

jobject obj) {

int ret;

int got_frame;

AVPacket enc_pkt;

if (!(ofmt_ctx->streams[0]->codec->codec->capabilities & CODEC_CAP_DELAY))

return 0;

while (1) {

enc_pkt.data = NULL;

enc_pkt.size = 0;

av_init_packet(&enc_pkt);

ret = avcodec_encode_video2(ofmt_ctx->streams[0]->codec, &enc_pkt, NULL,

&got_frame);

if (ret < 0)

break;

if (!got_frame) {

ret = 0;

break;

}

LOGI("Flush Encoder: Succeed to encode 1 frame!\tsize:%5d\n",

enc_pkt.size);

//Write PTS

AVRational time_base = ofmt_ctx->streams[0]->time_base; //{ 1, 1000 };

AVRational r_framerate1 = { 60, 2 };

AVRational time_base_q = { 1, AV_TIME_BASE };

//Duration between 2 frames (us)

int64_t calc_duration = (double) (AV_TIME_BASE)

* (1 / av_q2d(r_framerate1)); // in internal timestamp units

//Parameters

enc_pkt.pts = av_rescale_q(framecnt * calc_duration, time_base_q,

time_base);

enc_pkt.dts = enc_pkt.pts;

enc_pkt.duration = av_rescale_q(calc_duration, time_base_q, time_base);

// Convert PTS/DTS

enc_pkt.pos = -1;

framecnt++;

ofmt_ctx->duration = enc_pkt.duration * framecnt;

/* mux encoded frame */

ret = av_interleaved_write_frame(ofmt_ctx, &enc_pkt);

if (ret < 0)

break;

}

//Write file trailer

av_write_trailer(ofmt_ctx);

return 0;

}

JNIEXPORT jint JNICALL Java_com_zhanghui_test_MainActivity_close(JNIEnv *env,

jobject obj) {

if (video_st)

avcodec_close(video_st->codec);

avio_close(ofmt_ctx->pb);

avformat_free_context(ofmt_ctx);

return 0;

}

```
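
The NV21-to-YUV420P loop in `encode()` can be tried on its own, without FFmpeg or JNI. Below is a minimal sketch of the same de-interleaving step, assuming tightly packed rows and even dimensions; the function name `nv21_to_i420` and the separate output pointers are my own illustration, not part of the code above.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Convert one NV21 frame to planar I420 (YUV420P).
 * NV21 layout: width*height luma bytes, then interleaved V,U pairs.
 * Assumes tightly packed rows (no stride padding) and even dimensions.
 * nv21_to_i420 is a hypothetical helper name used for illustration. */
static void nv21_to_i420(const uint8_t *nv21, int width, int height,
                         uint8_t *y, uint8_t *u, uint8_t *v) {
    assert(width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);

    int y_len = width * height;      /* size of the luma plane */
    int uv_len = y_len / 4;          /* size of each chroma plane */
    const uint8_t *vu = nv21 + y_len;

    /* The luma plane is identical in both formats. */
    memcpy(y, nv21, (size_t) y_len);

    /* In NV21 the V sample comes first in each pair, then U. */
    for (int i = 0; i < uv_len; i++) {
        v[i] = vu[2 * i];
        u[i] = vu[2 * i + 1];
    }
}
```

In terms of the code above, `y`, `u` and `v` would correspond to `pFrameYUV->data[0]`, `data[1]` and `data[2]`. If the encoder's line sizes ever differ from the frame width, a per-row copy would be needed instead.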

Also, compiling that C file produces errors.
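
Separately from the compile errors, the timing values in `encode()` may be worth a look: the encoder is set up with a time base of 1/25, while the PTS is derived from `r_framerate1 = { 60, 2 }`, which is 30 fps. The sketch below reproduces that arithmetic in plain C so the numbers can be checked by hand. `rescale_q` is a simplified, hypothetical stand-in for `av_rescale_q()` (non-negative values only, no overflow protection), and the 1/1000 stream time base is taken from the `{ 1, 1000 }` comment in the code above.

```c
#include <assert.h>
#include <stdint.h>

#define TIME_BASE_US 1000000 /* same value as FFmpeg's AV_TIME_BASE */

/* Simplified stand-in for av_rescale_q(): converts a non-negative value
 * from time base src_num/src_den to dst_num/dst_den, rounding to nearest.
 * Hypothetical helper; no overflow protection. */
static int64_t rescale_q(int64_t a, int src_num, int src_den,
                         int dst_num, int dst_den) {
    int64_t num = (int64_t) src_num * dst_den;
    int64_t den = (int64_t) src_den * dst_num;
    assert(a >= 0 && den > 0);
    return (a * num + den / 2) / den;
}

/* PTS of frame n for a frame rate of fps_num/fps_den, expressed in the
 * stream time base tb_num/tb_den. Mirrors the calculation in encode():
 * a truncated per-frame duration in microseconds, then a rescale. */
static int64_t frame_pts(int64_t n, int fps_num, int fps_den,
                         int tb_num, int tb_den) {
    assert(fps_num > 0 && fps_den > 0);
    int64_t duration_us = (int64_t) TIME_BASE_US * fps_den / fps_num;
    return rescale_q(n * duration_us, 1, TIME_BASE_US, tb_num, tb_den);
}
```

With a 1/1000 time base, `frame_pts(30, 60, 2, 1, 1000)` gives 1000, so thirty frames span one second. If the camera and encoder are really meant to run at 25 fps, `{ 25, 1 }` would be the matching frame rate.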

[code=java]public class MainActivity extends Activity {

private static final String TAG = "MainActivity";

private Button mTakeButton;

private Button mPlayButton;

private Camera mCamera;

private SurfaceView mSurfaceView;

private SurfaceHolder mSurfaceHolder;

private boolean isRecording = false;

private int i = 0;

private class StreamTask extends AsyncTask<Void, Void, Void> {

private byte[] mData;

// Constructor

StreamTask(byte[] data) {

this.mData = data;

}

@Override

protected Void doInBackground(Void... params) {

// TODO Auto-generated method stub

if (mData != null) {

Log.i(">>>" , Arrays.toString(mData));

encode(mData);

}

return null;

}

}

private StreamTask mStreamTask;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

final Camera.PreviewCallback mPreviewCallbacx = new Camera.PreviewCallback() {

@Override

public void onPreviewFrame(byte[] arg0, Camera arg1) {

// TODO Auto-generated method stub

if (null != mStreamTask) {

switch (mStreamTask.getStatus()) {

case RUNNING:

return;

case PENDING:

mStreamTask.cancel(false);

break;

default:

break;

}

}

// if(arg0 != null ){

// for (int i = 0; i < arg0.length; i++) {

// Log.e(">>>", arg0[i]+"");

// }

// }

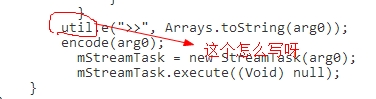

util.e(">>", Arrays.toString(arg0));

encode(arg0);

// mStreamTask = new StreamTask(arg0);

// mStreamTask.execute((Void) null);

}

};

mTakeButton = (Button) findViewById(R.id.take_button);

mPlayButton = (Button) findViewById(R.id.play_button);

PackageManager pm = this.getPackageManager();

boolean hasCamera = pm.hasSystemFeature(PackageManager.FEATURE_CAMERA)

|| pm.hasSystemFeature(PackageManager.FEATURE_CAMERA_FRONT)

|| Build.VERSION.SDK_INT < Build.VERSION_CODES.GINGERBREAD;

if (!hasCamera)

mTakeButton.setEnabled(false);

mTakeButton.setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View arg0) {

// TODO Auto-generated method stub

if (mCamera != null) {

if (isRecording) {

mTakeButton.setText("Start");

mCamera.setPreviewCallback(null);

Toast.makeText(MainActivity.this, "encode done",

Toast.LENGTH_SHORT).show();

isRecording = false;

} else {

mTakeButton.setText("Stop");

initial(mCamera.getParameters().getPreviewSize().width,

mCamera.getParameters().getPreviewSize().height);

// stream("rtmp://192.168.2.176/live/livestream");

mCamera.setPreviewCallback(mPreviewCallbacx);

isRecording = true;

}

}

}

});

mPlayButton.setOnClickListener(new OnClickListener() {

@Override

public void onClick(View arg0) {

String folderurl = Environment.getExternalStorageDirectory()

.getPath();

String inputurl = folderurl + "/" + "aa.flv";

// stream("rtmp://192.168.2.176/live/livestream");

}

});

mSurfaceView = (SurfaceView) findViewById(R.id.surfaceView1);

SurfaceHolder holder = mSurfaceView.getHolder();

holder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

holder.addCallback(new SurfaceHolder.Callback() {

@Override

public void surfaceDestroyed(SurfaceHolder arg0) {

// TODO Auto-generated method stub

if (mCamera != null) {

mCamera.stopPreview();

mSurfaceView = null;

mSurfaceHolder = null;

}

}

@Override

public void surfaceCreated(SurfaceHolder arg0) {

// TODO Auto-generated method stub

try {

if (mCamera != null) {

mCamera.setPreviewDisplay(arg0);

mSurfaceHolder = arg0;

}

} catch (IOException exception) {

Log.e(TAG, "Error setting up preview display", exception);

}

}

@Override

public void surfaceChanged(SurfaceHolder arg0, int arg1, int arg2,

int arg3) {

// TODO Auto-generated method stub

if (mCamera == null)

return;

Camera.Parameters parameters = mCamera.getParameters();

parameters.setPreviewSize(640, 480);

parameters.setPictureSize(640, 480);

mCamera.setParameters(parameters);

try {

mCamera.startPreview();

mSurfaceHolder = arg0;

} catch (Exception e) {

Log.e(TAG, "could not start preview", e);

mCamera.release();

mCamera = null;

}

}

});

}

@TargetApi(9)

@Override

protected void onResume() {

super.onResume();

if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.GINGERBREAD) {

mCamera = Camera.open(0);

} else {

mCamera = Camera.open();

}

}

@Override

protected void onPause() {

super.onPause();

flush();

close();

if (mCamera != null) {

mCamera.release();

mCamera = null;

}

}

// JNI

public native int initial(int width, int height);

public native int encode(byte[] yuvimage);

public native int flush();

public native int close();

// JNI

// public native int stream(String outputurl);

static {

System.loadLibrary("avutil-54");

System.loadLibrary("swresample-1");

System.loadLibrary("avcodec-56");

System.loadLibrary("avformat-56");

System.loadLibrary("swscale-3");

System.loadLibrary("postproc-53");

System.loadLibrary("avfilter-5");

System.loadLibrary("avdevice-56");

System.loadLibrary("encode");

}

}
[/code]