Environment: Ubuntu 16.04 (server edition), jdk1.8.0_111, hadoop-2.7.3, hive-2.1.1

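I had already installed Hive 2.1.1 in single-node pseudo-distributed mode with exactly the same software and versions; the only difference was that the OS was Ubuntu 16.04 (desktop edition). After that setup passed my tests, I wanted to run the same test in a fully distributed environment, but Hive reported an error on startup.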

My Hadoop cluster is fine; it started successfully.

The cluster nodes are:

Master 192.168.8.4

Slave1 192.168.8.5

Slave2 192.168.8.6

I installed Hive by following some online tutorials.

My hive-site.xml configuration is:

<configuration>

<!-- WARNING!!! This file is auto generated for documentation purposes ONLY! -->

<!-- WARNING!!! Any changes you make to this file will be ignored by Hive. -->

<!-- WARNING!!! You must make your changes in hive-site.xml instead. -->

<!-- Hive Execution Parameters -->

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>hive.querylog.location</name>

<value>/usr/local/apache-hive-2.1.1-bin/log</value>

<description>Location of Hive run time structured log file</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.8.4:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

</configuration>

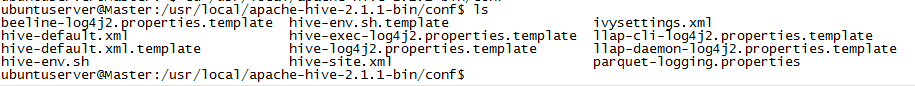

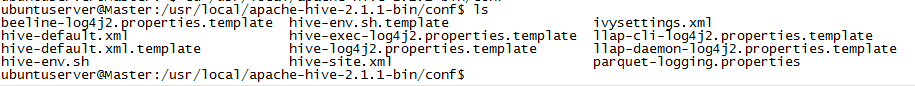

hive-env.sh

# export HADOOP_HEAPSIZE=1024

export HADOOP_HEAPSIZE=1024

#

# Larger heap size may be required when running queries over large number of files or partitions.

# By default hive shell scripts use a heap size of 256 (MB). Larger heap size would also be

# appropriate for hive server (hwi etc).

# Set HADOOP_HOME to point to a specific hadoop install directory

#HADOOP_HOME=${bin}/../../hadoop

HADOOP_HOME=/usr/local/hadoop

# Hive Configuration Directory can be controlled by:

# export HIVE_CONF_DIR=

export HIVE_CONF_DIR=/usr/local/apache-hive-2.1.1-bin/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:

# export HIVE_AUX_JARS_PATH=

export HIVE_AUX_JARS_PATH=/usr/local/apache-hive-2.1.1-bin/lib

Environment variables configured in /etc/profile:

export JAVA_HOME=/usr/local/jdk1.8.0_111

export JRE_HOME=$JAVA_HOME/jre

export HIVE_HOME=/usr/local/apache-hive-2.1.1-bin

export HADOOP_HOME=/usr/local/hadoop

export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib

export PATH=$JAVA_HOME/bin:$PATH:/usr/local/hadoop/bin:/usr/local/hadoop/sbin:$HIVE_HOME/bin

export HADOOP_COMMON_LIB_NATIVE_DIR=/usr/local/hadoop/lib/native

export HADOOP_OPTS="-Djava.library.path=/usr/local/hadoop/lib"

However, when I start Hive, it prints the following error and messages:

ubuntuserver@Master:~$ hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/apache-hive-2.1.1-bin/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

[Fatal Error] :-1:-1: Premature end of file.

Exception in thread "main" java.lang.RuntimeException: org.xml.sax.SAXParseException; Premature end of file.

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2645)

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2492)

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2405)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1143)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1115)

at org.apache.hadoop.mapred.JobConf.setJar(JobConf.java:520)

at org.apache.hadoop.mapred.JobConf.setJarByClass(JobConf.java:538)

at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:432)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:3694)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:3652)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:82)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:66)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:657)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: org.xml.sax.SAXParseException; Premature end of file.

at org.apache.xerces.parsers.DOMParser.parse(Unknown Source)

at org.apache.xerces.jaxp.DocumentBuilderImpl.parse(Unknown Source)

at javax.xml.parsers.DocumentBuilder.parse(DocumentBuilder.java:150)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2480)

at org.apache.hadoop.conf.Configuration.parse(Configuration.java:2468)

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2539)

... 19 more

ubuntuserver@Master:~$

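I have gone through the relevant XML configuration files and they look correct to me, so I don't know which setting is wrong. The same setup starts normally in the single-node environment.

Could anyone help me figure out what is going on?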
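One way to narrow this down: "Premature end of file" from a SAX parser usually means the parser reached the end of the input before finding a root element, which is what an empty (zero-byte) or truncated XML file produces. Since the trace shows the exception coming from `Configuration.loadResource`, the offending file could be any XML resource that Hadoop or Hive loads, not necessarily hive-site.xml. The following is a minimal sketch, not a definitive diagnosis, that scans configuration directories for `.xml` files that are empty or not well-formed. The function name `find_bad_xml` is hypothetical, the Hive directory is taken from `HIVE_CONF_DIR` above, and `/usr/local/hadoop/etc/hadoop` is only an assumed location for the Hadoop configuration directory. Python's parser is not the Xerces parser Hadoop uses, so this only checks basic well-formedness.

```python
import os
import sys
import xml.etree.ElementTree as ET


def find_bad_xml(conf_dirs):
    """Return (path, reason) pairs for XML files that are empty or not well-formed."""
    problems = []
    for conf_dir in conf_dirs:
        if not os.path.isdir(conf_dir):
            problems.append((conf_dir, "directory not found"))
            continue
        for name in sorted(os.listdir(conf_dir)):
            path = os.path.join(conf_dir, name)
            # Only look at regular files with an .xml extension.
            if not name.endswith(".xml") or not os.path.isfile(path):
                continue
            if os.path.getsize(path) == 0:
                problems.append((path, "empty file"))
                continue
            try:
                ET.parse(path)
            except ET.ParseError as exc:
                problems.append((path, "not well-formed: %s" % exc))
    return problems


if __name__ == "__main__":
    # Assumed locations: the first comes from HIVE_CONF_DIR in hive-env.sh,
    # the second is a guess at the Hadoop conf dir under HADOOP_HOME.
    dirs = sys.argv[1:] or [
        "/usr/local/apache-hive-2.1.1-bin/conf",
        "/usr/local/hadoop/etc/hadoop",
    ]
    for path, reason in find_bad_xml(dirs):
        print("%s: %s" % (path, reason))
```

Running the script on the Master node with no arguments checks the two assumed directories; other directories can be passed as command-line arguments. If nothing is printed, every `.xml` file it found parsed cleanly, and the problem may lie in a resource loaded from somewhere else on the classpath.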
