public class RunHadoop {

public static void main(String[] args){

//TODO: run the MapReduce job from here

//Package path: E:\ideaworkspace\hadooptest\out\artifacts\hadooptest_jar

//Create a directory

//String folderName = "/test";

//Files.mkdirFolder(folderName);

//Create the word_input directory

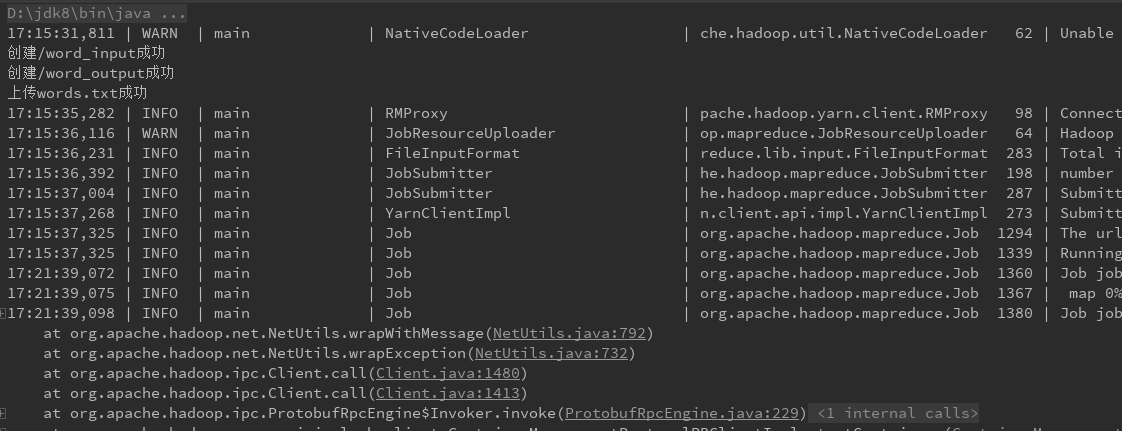

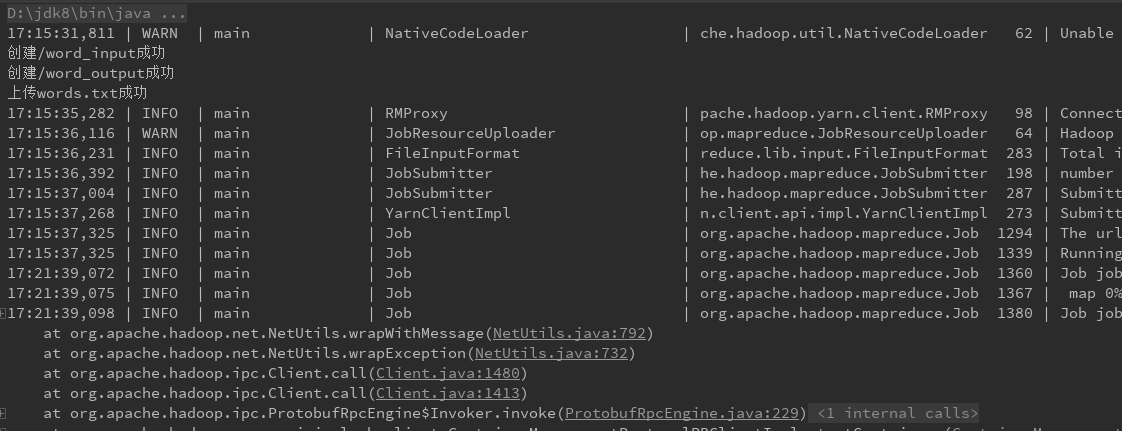

String folderName = "/word_input";

Files.mkdirFolder(folderName);

System.out.println("Created " + folderName + " successfully");

//Create the word_output directory

folderName = "/word_output";

Files.mkdirFolder(folderName);

System.out.println("Created " + folderName + " successfully");

//Upload the file

String localPath = "E:\\Hadoop\\upload\\";

String fileName = "words.txt";

String hdfsPath = "/word_input/";

Files.uploadFile(localPath, fileName, hdfsPath);

System.out.println("Uploaded " + fileName + " successfully");

//Run wordcount

int result = -1;

try{

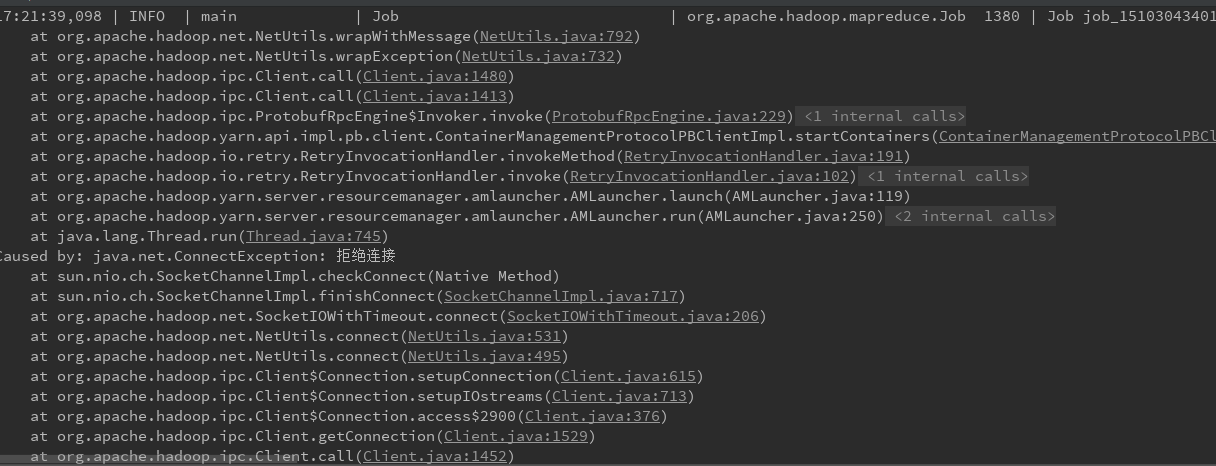

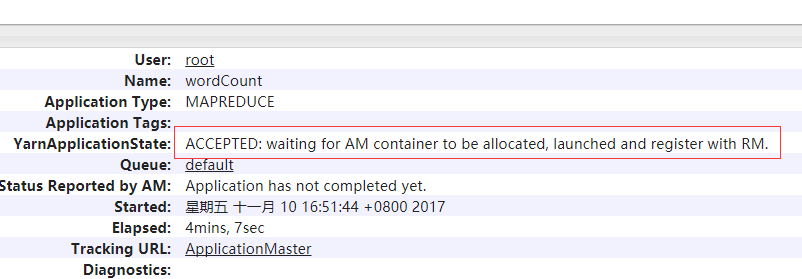

result = new WordCountMR().run();

}catch (Exception e){

System.out.println(e.getMessage());

}

if (result == 1) {

System.out.println("");

//On success, download the result file to the local machine

String downPath = "/word_output/";

String downName ="part-r-00000";

String savePath = "E:\\Hadoop\\download\\";

Files.getFileFromHadoop(downPath,downName,savePath);

System.out.println("Job succeeded; result saved to " + savePath);

} else {

System.out.println("Job failed");

}

}

}
