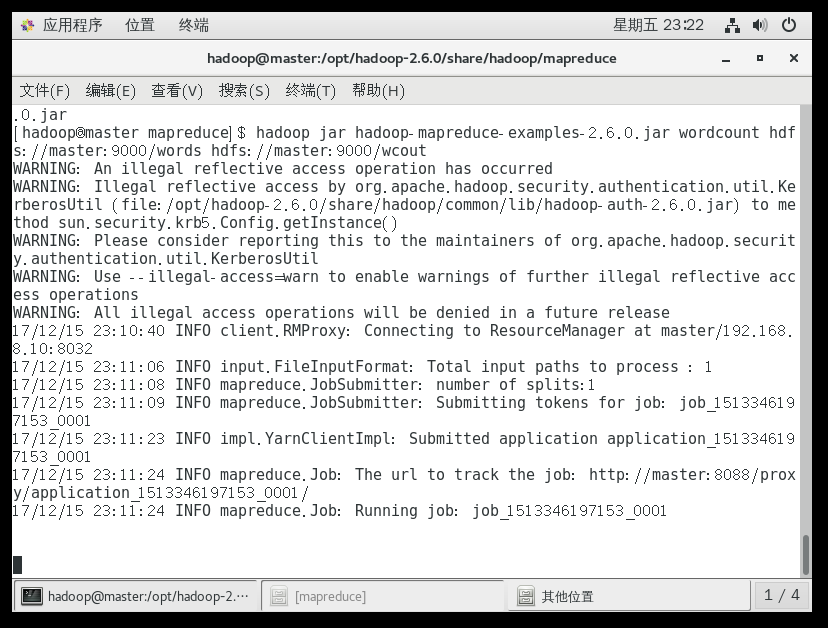

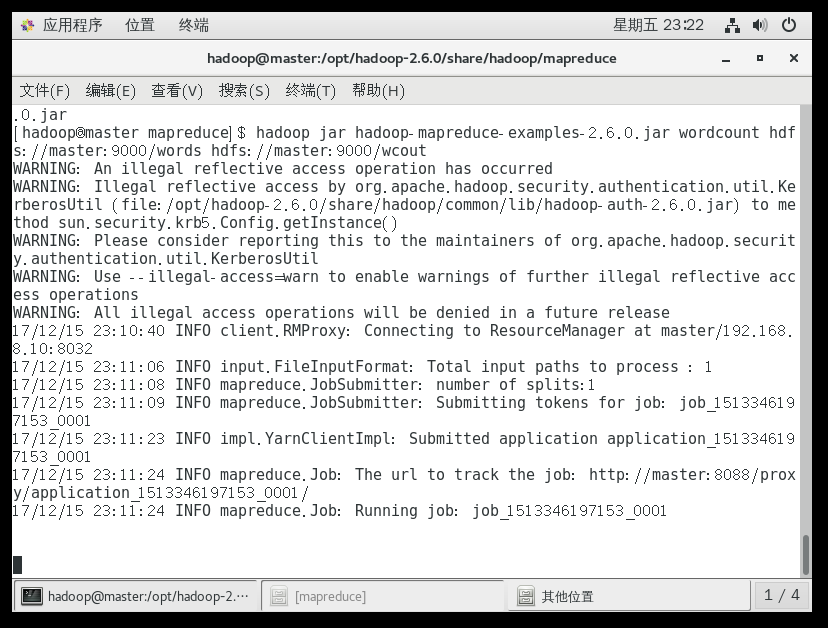

Here is what running the wordcount program looks like.

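The daemons on both master and slave start normally, and I can upload files to HDFS, but the wordcount program hangs every time I run it. I'm a beginner and can't make sense of the log files. Any help would be appreciated.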

Part of the namenode log follows:

STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r e3496499ecb8d220fba99dc5ed4c99c8f9e33bb1; compiled by 'jenkins' on 2014-11-13T21:10Z

STARTUP_MSG: java = 9.0.1

************************************************************/

2017-12-19 20:18:56,107 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2017-12-19 20:18:56,184 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: createNameNode []

2017-12-19 20:18:58,179 INFO org.apache.hadoop.metrics2.impl.MetricsConfig: loaded properties from hadoop-metrics2.properties

2017-12-19 20:19:02,780 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Scheduled snapshot period at 10 second(s).

2017-12-19 20:19:02,780 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NameNode metrics system started

2017-12-19 20:19:02,783 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: fs.defaultFS is hdfs://master:9000

2017-12-19 20:19:02,784 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Clients are to use master:9000 to access this namenode/service.

2017-12-19 20:19:39,623 INFO org.apache.hadoop.hdfs.DFSUtil: Starting Web-server for hdfs at: http://0.0.0.0:50070

2017-12-19 20:19:47,900 INFO org.mortbay.log: Logging to org.slf4j.impl.Log4jLoggerAdapter(org.mortbay.log) via org.mortbay.log.Slf4jLog

2017-12-19 20:19:48,538 INFO org.apache.hadoop.http.HttpRequestLog: Http request log for http.requests.namenode is not defined

2017-12-19 20:19:50,610 INFO org.apache.hadoop.http.HttpServer2: Added global filter 'safety' (class=org.apache.hadoop.http.HttpServer2$QuotingInputFilter)

2017-12-19 20:19:51,139 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context hdfs

2017-12-19 20:19:51,139 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context logs

2017-12-19 20:19:51,140 INFO org.apache.hadoop.http.HttpServer2: Added filter static_user_filter (class=org.apache.hadoop.http.lib.StaticUserWebFilter$StaticUserFilter) to context static

2017-12-19 20:19:54,257 INFO org.apache.hadoop.http.HttpServer2: Added filter 'org.apache.hadoop.hdfs.web.AuthFilter' (class=org.apache.hadoop.hdfs.web.AuthFilter)

2017-12-19 20:19:54,354 INFO org.apache.hadoop.http.HttpServer2: addJerseyResourcePackage: packageName=org.apache.hadoop.hdfs.server.namenode.web.resources;org.apache.hadoop.hdfs.web.resources, pathSpec=/webhdfs/v1/*

2017-12-19 20:19:58,842 INFO org.apache.hadoop.http.HttpServer2: Jetty bound to port 50070

2017-12-19 20:19:58,842 INFO org.mortbay.log: jetty-6.1.x

2017-12-19 20:20:14,556 INFO org.mortbay.log: Started HttpServer2$SelectChannelConnectorWithSafeStartup@0.0.0.0:50070

2017-12-19 20:20:25,244 WARN org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Only one image storage directory (dfs.namenode.name.dir) configured. Beware of data loss due to lack of redundant storage directories!

2017-12-19 20:20:25,244 WARN org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Only one namespace edits storage directory (dfs.namenode.edits.dir) configured. Beware of data loss due to lack of redundant storage directories!

2017-12-19 20:20:25,664 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: No KeyProvider found.

2017-12-19 20:20:25,958 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsLock is fair:true

2017-12-19 20:20:26,358 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

2017-12-19 20:20:26,358 INFO org.apache.hadoop.hdfs.server.blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2017-12-19 20:20:26,361 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2017-12-19 20:20:26,378 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: The block deletion will start around 2017 12月 19 20:20:26

2017-12-19 20:20:26,667 INFO org.apache.hadoop.util.GSet: Computing capacity for map BlocksMap

2017-12-19 20:20:26,667 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2017-12-19 20:20:26,670 INFO org.apache.hadoop.util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

2017-12-19 20:20:26,670 INFO org.apache.hadoop.util.GSet: capacity = 2^21 = 2097152 entries

2017-12-19 20:20:28,017 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: dfs.block.access.token.enable=false

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: defaultReplication = 2

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxReplication = 512

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: minReplication = 1

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxReplicationStreams = 2

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: replicationRecheckInterval = 3000

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: encryptDataTransfer = false

2017-12-19 20:20:28,018 INFO org.apache.hadoop.hdfs.server.blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2017-12-19 20:20:28,367 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: fsOwner = hadoop (auth:SIMPLE)

2017-12-19 20:20:28,367 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: supergroup = supergroup

2017-12-19 20:20:28,367 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: isPermissionEnabled = true

2017-12-19 20:20:28,368 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: HA Enabled: false

2017-12-19 20:20:28,369 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Append Enabled: true

2017-12-19 20:20:29,850 INFO org.apache.hadoop.util.GSet: Computing capacity for map INodeMap

2017-12-19 20:20:29,850 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2017-12-19 20:20:29,851 INFO org.apache.hadoop.util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

2017-12-19 20:20:29,851 INFO org.apache.hadoop.util.GSet: capacity = 2^20 = 1048576 entries

2017-12-19 20:20:30,275 INFO org.apache.hadoop.hdfs.server.namenode.NameNode: Caching file names occuring more than 10 times

2017-12-19 20:20:30,297 INFO org.apache.hadoop.util.GSet: Computing capacity for map cachedBlocks

2017-12-19 20:20:30,297 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2017-12-19 20:20:30,298 INFO org.apache.hadoop.util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

2017-12-19 20:20:30,298 INFO org.apache.hadoop.util.GSet: capacity = 2^18 = 262144 entries

2017-12-19 20:20:30,301 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2017-12-19 20:20:30,301 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

2017-12-19 20:20:30,301 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

2017-12-19 20:20:30,303 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Retry cache on namenode is enabled

2017-12-19 20:20:30,304 INFO org.apache.hadoop.hdfs.server.namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2017-12-19 20:20:30,432 INFO org.apache.hadoop.util.GSet: Computing capacity for map NameNodeRetryCache

2017-12-19 20:20:30,432 INFO org.apache.hadoop.util.GSet: VM type = 64-bit

2017-12-19 20:20:30,433 INFO org.apache.hadoop.util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

2017-12-19 20:20:30,433 INFO org.apache.hadoop.util.GSet: capacity = 2^15 = 32768 entries

2017-12-19 20:20:30,477 INFO org.apache.hadoop.hdfs.server.namenode.NNConf: ACLs enabled? false

2017-12-19 20:20:30,477 INFO org.apache.hadoop.hdfs.server.namenode.NNConf: XAttrs enabled? true

2017-12-19 20:20:30,477 INFO org.apache.hadoop.hdfs.server.namenode.NNConf: Maximum size of an xattr: 16384

2017-12-19 20:20:30,738 INFO org.apache.hadoop.hdfs.server.common.Storage: Lock on /opt/hadoop-2.6.0/dfs/name/in_use.lock acquired by nodename 9413@master

2017-12-19 20:20:31,114 INFO org.apache.hadoop.hdfs.server.namenode.FileJournalManager: Recovering unfinalized segments in /opt/hadoop-2.6.0/dfs/name/current

2017-12-19 20:20:32,519 INFO org.apache.hadoop.hdfs.server.namenode.FileJournalManager: Finalizing edits file /opt/hadoop-2.6.0/dfs/name/current/edits_inprogress_0000000000000000173 -> /opt/hadoop-2.6.0/dfs/name/current/edits_0000000000000000173-0000000000000000221

2017-12-19 20:20:32,930 INFO org.apache.hadoop.hdfs.server.namenode.FSImageFormatPBINode: Loading 32 INodes.

2017-12-19 20:20:33,158 INFO org.apache.hadoop.hdfs.server.namenode.FSImageFormatProtobuf: Loaded FSImage in 0 seconds.

2017-12-19 20:20:33,158 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Loaded image for txid 172 from /opt/hadoop-2.6.0/dfs/name/current/fsimage_0000000000000000172

2017-12-19 20:20:33,158 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Reading org.apache.hadoop.hdfs.server.namenode.RedundantEditLogInputStream@1bc425e7 expecting start txid #173

2017-12-19 20:20:33,158 INFO org.apache.hadoop.hdfs.server.namenode.FSImage: Start loading edits file /opt/hadoop-2.6.0/dfs/name/current/edits_0000000000000000173-0000000000000000221
