
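```python
import re

import requests
from bs4 import BeautifulSoup


def get_page(url):
    """Download url and return the decoded HTML, or None if the request fails."""
    try:
        kv = {'user-agent': 'Mozilla/5.0'}
        r = requests.get(url, headers=kv, timeout=10)
        r.raise_for_status()
        # Guess the real encoding from the response body instead of the HTTP headers
        r.encoding = r.apparent_encoding
        return r.text
    except requests.RequestException:
        return None
```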
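For comparison, the same fetch pattern (explicit User-Agent header, timeout, `None` on failure) can be sketched with only the standard library's `urllib.request`. This is a swapped-in technique rather than what the script uses; `build_request` and `fetch_text` are hypothetical helper names, and falling back to UTF-8 when the server declares no charset is an assumption.

```python
import urllib.request

DEFAULT_UA = "Mozilla/5.0"


def build_request(url, user_agent=DEFAULT_UA):
    """Create a GET request that carries an explicit User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_text(url, timeout=10):
    """Return the decoded page body, or None on any URL, network or HTTP error."""
    try:
        # Building the request inside try also catches malformed URLs (ValueError)
        req = build_request(url)
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            # Assumption: use UTF-8 when the response declares no charset
            charset = resp.headers.get_content_charset() or "utf-8"
            return resp.read().decode(charset, errors="replace")
    except (OSError, ValueError):
        # URLError, HTTPError and timeouts are all OSError subclasses
        return None
```

Nothing is sent until `urlopen` runs, so `build_request` can be inspected offline; note that `urllib` stores header names capitalized, so the header is looked up as `User-agent`.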

```python
def parse_page(html, return_list):
    """Append one [date, weather, low, high, wind, level] row per forecast day."""
    soup = BeautifulSoup(html, 'html.parser')
    # The 7-day forecast is a <ul class="t clearfix"> with one <li> per day
    day_list = soup.find('ul', 't clearfix').find_all('li')
    for day in day_list:
        date = day.find('h1').get_text()
        wea = day.find('p', 'wea').get_text()
        tem = day.find('p', 'tem')
        # The high-temperature <span> is not always present, so fall back to ''
        high_span = tem.find('span')
        hightem = high_span.get_text() if high_span else ''
        lowtem = tem.find('i').get_text()
        # Wind directions are the title attributes inside <p class="win"><em>;
        # several directions are joined with '-'
        win = re.findall(r'(?<= title=").*?(?=")', str(day.find('p', 'win').find('em')))
        wind = '-'.join(win)
        level = day.find('p', 'win').find('i').get_text()
        return_list.append([date, wea, lowtem, hightem, wind, level])
```
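The wind-direction step relies on a regular expression with a lookbehind `(?<= title=")` and a lookahead `(?=")`, so it only matches when the attribute is written exactly as ` title="…"`. The sketch below, using a hypothetical `<em>` fragment shaped like the one `parse_page` searches, compares that regex with a standard-library `html.parser` collector that reads the attributes directly. `SAMPLE_EM`, `wind_by_regex`, `wind_by_parser` and `TitleCollector` are illustrative names, not part of the original script.

```python
import re
from html.parser import HTMLParser

# Hypothetical markup, modeled on what the regex in parse_page expects
SAMPLE_EM = '<em><span class="SW" title="西南风"></span><span class="S" title="南风"></span></em>'

# Shortest text between ' title="' and the next '"'
TITLE_RE = re.compile(r'(?<= title=").*?(?=")')


def wind_by_regex(html):
    """Join all title values found by the lookaround regex."""
    return '-'.join(TITLE_RE.findall(html))


class TitleCollector(HTMLParser):
    """Collect every non-empty title attribute, whatever its quoting."""

    def __init__(self):
        super().__init__()
        self.titles = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == 'title' and value:
                self.titles.append(value)


def wind_by_parser(html):
    """Join all title values found by a real HTML tokenizer."""
    collector = TitleCollector()
    collector.feed(html)
    collector.close()
    return '-'.join(collector.titles)
```

Both give `西南风-南风` for the sample; for `<span title='北风'></span>` (single quotes) the regex finds nothing while the parser still returns `北风`.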

def print_res(return_list):

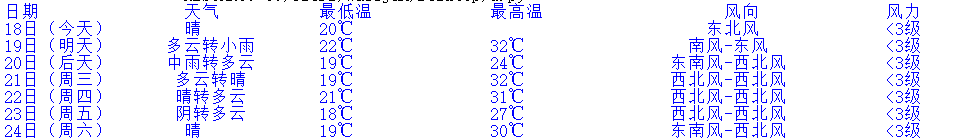

tplt = '{0:<10}\t{1:^10}\t{2:^10}\t{3:{6}^10}\t{4:{6}^10}\t{5:{6}^5}'

print(tplt.format('日期', '天气', '最低温', '最高温', '风向', '风力',chr(12288)))

for i in return_list:

print(tplt.format(i[0], i[1],i[2],i[3],i[4],i[5],chr(12288)))

def main():

url = 'http://www.weather.com.cn/weather/101110101.shtml'

html = get_page(url)

wea_list = []

parse_page(html, wea_list)

print_res(wea_list)

if __name__ == '__main__':

main()
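Why `chr(12288)`: a Chinese character usually occupies two terminal columns, while the default fill character (an ASCII space) occupies one, so centering with spaces gives words of different lengths different display widths. A minimal sketch, assuming a terminal that renders East Asian Wide and Fullwidth characters as two columns; `center_cjk` and `display_width` are hypothetical helpers.

```python
import unicodedata

FULL_WIDTH_SPACE = chr(12288)  # U+3000 IDEOGRAPHIC SPACE


def center_cjk(text, width):
    """Center text in `width` characters, padding with U+3000 instead of ' '."""
    # Nested replacement fields supply the fill character and the width
    return '{0:{1}^{2}}'.format(text, FULL_WIDTH_SPACE, width)


def display_width(s):
    """Approximate terminal columns: Wide/Fullwidth characters count as 2."""
    return sum(2 if unicodedata.east_asian_width(c) in ('W', 'F') else 1 for c in s)
```

Centered in 6 characters with ASCII spaces, `东风` and `最高温` take 8 and 9 columns; with U+3000 padding both take 12. Mixed strings such as temperatures still contain half-width digits, so those columns can drift slightly even with the full-width fill.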

```python
import requests
import bs4 as bs
from prettytable import PrettyTable
import json
# Modules for reading the configuration file
import os
import configparser

# ---------------------------------------------------------
# Read the configuration file
# ---------------------------------------------------------
# Project directory (the folder that contains this script)
rootDir = os.path.split(os.path.realpath(__file__))[0]
# Path of the configuration file
configFilePath = os.path.join(rootDir, 'SpWeather.ini')


def get_config_values(section, option):
    """
    Return the value of `option` in the given `section`.

    :param section: section name, written in [] in the ini file
    :param option: key inside that section
    :return: the value as a string
    """
    config = configparser.ConfigParser()
    config.read(configFilePath, encoding='utf-8')
    return config.get(section=section, option=option)


# ---------------------------------------------------------
# City from the configuration file (read, but not used below:
# the URL keeps a hard-coded city code)
TheCity = get_config_values('CityAndDir', 'TheCity')
# Output file path from the configuration file
OutDir = get_config_values('CityAndDir', 'OutDir')
```
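The second script expects `SpWeather.ini` next to the script, with a `[CityAndDir]` section holding `TheCity` and `OutDir`. The file itself is not shown, so the sketch below uses placeholder values and parses an in-memory string with `configparser.read_string`; `SAMPLE_INI` and `load_settings` are hypothetical. Because `OutDir` is passed straight to `open()`, it has to be a file path, not a directory.

```python
import configparser

# Hypothetical SpWeather.ini: section/option names come from the script,
# the values are placeholders
SAMPLE_INI = """
[CityAndDir]
TheCity = 北京
OutDir = weather.json
"""


def load_settings(text):
    """Return (TheCity, OutDir) from ini text."""
    config = configparser.ConfigParser()
    config.read_string(text)
    # Option names are case-insensitive: ConfigParser lower-cases them
    return config.get('CityAndDir', 'TheCity'), config.get('CityAndDir', 'OutDir')
```

A non-ASCII value such as a Chinese city name is one reason to read the real file with an explicit encoding.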

#********************************************************************

url = "http://www.weather.com.cn/weather/101110101.shtml"

header = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.87 Safari/537.36"

}

weathers = ["日期", "天气", "最低温", "最高温", "风向", "风力"]

table = PrettyTable(weathers)

json_data = []

res = requests.get(url, header)

if res.status_code == 200:

soup = bs.BeautifulSoup(res.content.decode("utf-8"), "lxml")

for el in soup.select(".c7d ul li.skyid"):

row = []

row.append(el.select_one("h1").get_text())

row.append(el.select_one(".wea").get_text())

row.append(el.select_one(".tem i").get_text())

high = el.select_one(".tem span")

row.append(high.get_text() if high else "")

row.append("-".join([win.get("title") for win in el.select(".win span")]))

row.append(el.select_one(".win i").get_text())

table.add_row(row)

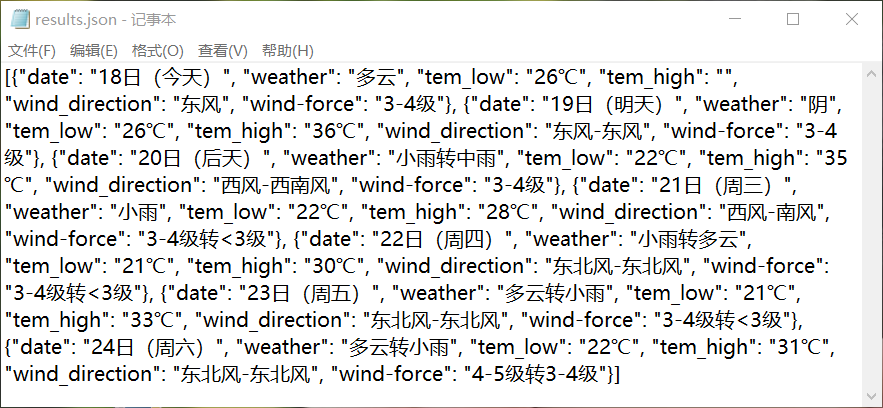

data = {}

data['date'] = el.select_one("h1").get_text()

data['weather'] = el.select_one(".wea").get_text()

data['tem_low'] = el.select_one(".tem i").get_text()

data['tem_high'] = high.get_text() if high else ""

data['wind_direction'] = "-".join([win.get("title") for win in el.select(".win span")])

data['wind-force'] = el.select_one(".win i").get_text()

json_data.append(data)

_json = json.dumps(json_data, ensure_ascii=False)

print(_json)

with open(OutDir, 'w') as result_file:

json.dump(json_data, result_file, ensure_ascii=False)
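Writing the results: `ensure_ascii=False` keeps Chinese characters readable in the output, so it is safer to open the file with an explicit UTF-8 encoding; otherwise `open()` uses the platform's default encoding, which on Chinese Windows is typically GBK. The sketch below round-trips hypothetical rows in the same shape as `json_data` through a temporary file; `SAMPLE_ROWS`, `save_forecast` and `load_forecast` are illustrative names and the values are placeholders, not scraped data.

```python
import json
import os
import tempfile

# Hypothetical rows in the same shape as json_data (placeholder values)
SAMPLE_ROWS = [
    {"date": "7日", "weather": "晴", "tem_low": "15℃", "tem_high": "25",
     "wind_direction": "北风-东风", "wind-force": "<3级"},
]


def save_forecast(rows, path):
    """Write rows as UTF-8 JSON, keeping non-ASCII text unescaped."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(rows, f, ensure_ascii=False, indent=2)


def load_forecast(path):
    """Read the JSON file back with the same encoding."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        out = os.path.join(tmp, "weather.json")
        save_forecast(SAMPLE_ROWS, out)
        print(load_forecast(out) == SAMPLE_ROWS)
```

With the default `ensure_ascii=True` the file would instead contain `\uXXXX` escapes, which is valid JSON but hard to read.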