Thanks to everyone for your help, whether or not the problem gets solved.

Flume version: 1.7.0

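Overall setup: one agent (a1) listens on a netcat port and forwards the messages it receives to two other agents (a2 and a3). Those two receiving agents run on virtual machines.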

Expected result: a1 sends what it receives to hadoop102 (a2 receives letters) or hadoop103 (a3 receives numbers).

Actual result: the two receiving agents don't show only their own type of message; each one receives everything that is sent.
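
To make expected vs. actual behaviour concrete, here is a simplified, hypothetical model (not Flume's real classes) of the two channel selectors. A replicating selector, which is Flume's default, copies every event to every channel, and that matches what both receivers appear to be doing. A multiplexing selector looks up a header value (here `type`) in its mapping and picks channels from it. In this model an event with no matching header falls back to a default list, which is empty in the example because the posted config sets no `selector.default`.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class SelectorModel {
    // Simplified model of a replicating selector: every channel gets the event.
    static List<String> replicating(List<String> channels) {
        return channels;
    }

    // Simplified model of a multiplexing selector: look up the header value in
    // the mapping and fall back to the default channels when nothing matches.
    static List<String> multiplexing(Map<String, String> headers, String headerName,
                                     Map<String, List<String>> mapping,
                                     List<String> defaults) {
        String value = headers.get(headerName);
        if (value == null || value.trim().isEmpty()) {
            return defaults;
        }
        return mapping.getOrDefault(value, defaults);
    }

    public static void main(String[] args) {
        List<String> all = Arrays.asList("c1", "c2");
        Map<String, List<String>> mapping = new HashMap<>();
        mapping.put("letter", Collections.singletonList("c1"));
        mapping.put("number", Collections.singletonList("c2"));

        Map<String, String> headers = new HashMap<>();
        headers.put("type", "letter");

        System.out.println(replicating(all)); // [c1, c2]
        System.out.println(multiplexing(headers, "type", mapping,
                Collections.<String>emptyList())); // [c1]
    }
}
```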

Flume configuration files

[a1] -> listens and forwards whatever it receives

    # Name
    a1.sources = s1
    a1.channels = c1 c2
    a1.sinks = k1 k2

    # Sources
    a1.sources.s1.type = netcat
    a1.sources.s1.bind = localhost
    a1.sources.s1.port = 44444

    # Channels
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100
    a1.channels.c2.type = memory
    a1.channels.c2.capacity = 1000
    a1.channels.c2.transactionCapacity = 100

    # Channel Interceptors
    a1.sources.r1.interceptors = i1
    a1.sources.r1.interceptors.i1.type = dell.flume.test.test1$Builder

    # Channel Selector
    a1.sources.r1.selector.type = multiplexing
    a1.sources.r1.selector.header = type
    a1.sources.r1.selector.mapping.letter = c1
    a1.sources.r1.selector.mapping.number = c2

    # Sinks
    a1.sinks.k1.type = avro
    a1.sinks.k1.hostname = hadoop102
    a1.sinks.k1.port = 4142
    a1.sinks.k2.type = avro
    a1.sinks.k2.hostname = hadoop103
    a1.sinks.k2.port = 4141

    # Bind
    a1.sources.s1.channels = c1 c2
    a1.sinks.k1.channel = c1
    a1.sinks.k2.channel = c2
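
One thing worth checking (a guess, not verified against a running agent): the interceptor and selector keys are set under `a1.sources.r1`, but the only declared source is `s1`. Flume configures components by the names listed in `a1.sources`, so properties for an undeclared name like `r1` are most likely ignored. `s1` would then run with no interceptor and the default replicating selector, which would explain both the empty headers and both receivers getting everything. Changing those keys from `a1.sources.r1.` to `a1.sources.s1.` would be the first thing to try. The stdlib-only sketch below (the class `SourceNameCheck` and method `findUndeclaredSources` are hypothetical) loads an excerpt of the a1 config with `java.util.Properties` and lists source names that appear in keys but are not declared.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Arrays;
import java.util.HashSet;
import java.util.Properties;
import java.util.Set;
import java.util.TreeSet;

public class SourceNameCheck {
    // Hypothetical helper: returns source names used in keys such as
    // "<agent>.sources.<name>.<prop>" that are not listed in "<agent>.sources".
    static Set<String> findUndeclaredSources(Properties props, String agent) {
        String prefix = agent + ".sources.";
        Set<String> declared = new HashSet<>(Arrays.asList(
                props.getProperty(agent + ".sources", "").trim().split("\\s+")));
        Set<String> undeclared = new TreeSet<>();
        for (String key : props.stringPropertyNames()) {
            if (key.startsWith(prefix)) {
                String rest = key.substring(prefix.length());
                int dot = rest.indexOf('.');
                String name = dot < 0 ? rest : rest.substring(0, dot);
                if (!declared.contains(name)) {
                    undeclared.add(name);
                }
            }
        }
        return undeclared;
    }

    public static void main(String[] args) throws IOException {
        // Excerpt of the a1 configuration from the question.
        String conf = String.join("\n",
                "a1.sources = s1",
                "a1.sources.s1.type = netcat",
                "a1.sources.r1.interceptors = i1",
                "a1.sources.r1.selector.type = multiplexing",
                "a1.sources.s1.channels = c1 c2");
        Properties props = new Properties();
        props.load(new StringReader(conf));
        System.out.println(findUndeclaredSources(props, "a1")); // prints [r1]
    }
}
```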

[a2] -> receives letters

    # Name
    a2.sources = s1
    a2.channels = c1
    a2.sinks = k1

    # Sources
    a2.sources.s1.type = avro
    a2.sources.s1.bind = hadoop102
    a2.sources.s1.port = 4142

    # Channels
    a2.channels.c1.type = memory
    a2.channels.c1.capacity = 1000
    a2.channels.c1.transactionCapacity = 100

    # Sinks
    a2.sinks.k1.type = logger

    # Bind
    a2.sources.s1.channels = c1
    a2.sinks.k1.channel = c1

[a3] -> receives numbers

    # Name
    a3.sources = s1
    a3.channels = c1
    a3.sinks = k1

    # Sources
    a3.sources.s1.type = avro
    a3.sources.s1.bind = hadoop103
    a3.sources.s1.port = 4141

    # Channels
    a3.channels.c1.type = memory
    a3.channels.c1.capacity = 1000
    a3.channels.c1.transactionCapacity = 100

    # Sinks
    a3.sinks.k1.type = logger

    # Bind
    a3.sources.s1.channels = c1
    a3.sinks.k1.channel = c1

Interceptor code

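    package dell.flume.test;

    import java.util.List;

    import org.apache.flume.Context;
    import org.apache.flume.Event;
    import org.apache.flume.interceptor.Interceptor;

    // Tags each event with a "type" header based on the first byte of its body.
    public class test1 implements Interceptor {

        @Override
        public void initialize() {
        }

        @Override
        public Event intercept(Event event) {
            byte[] body = event.getBody();
            // Note: the comparisons are strict, so bodies starting with 'a', 'z',
            // '0' or '9' get no header; an empty body throws
            // ArrayIndexOutOfBoundsException on body[0].
            if (body[0] < 'z' && body[0] > 'a') {
                event.getHeaders().put("type", "letter");
            } else if (body[0] > '0' && body[0] < '9') {
                event.getHeaders().put("type", "number");
            }
            return event;
        }

        @Override
        public List<Event> intercept(List<Event> events) {
            // Tag every event in the batch in place.
            for (Event event : events) {
                intercept(event);
            }
            return events;
        }

        @Override
        public void close() {
        }

        // Flume instantiates the interceptor through this nested Builder,
        // referenced in the config as dell.flume.test.test1$Builder.
        public static class Builder implements Interceptor.Builder {

            @Override
            public Interceptor build() {
                return new test1();
            }

            @Override
            public void configure(Context context) {
            }
        }
    }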
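
Separately from the config, the strict comparisons in `intercept` mean that bodies starting with 'a', 'z', '0' or '9' are never tagged. Below is a Flume-free sketch of the same classification with inclusive ranges and an empty-body guard. The class `TypeClassifier` and method `classify` are hypothetical names for illustration, and like the original it only treats lowercase ASCII letters as letters.

```java
import java.nio.charset.StandardCharsets;

public class TypeClassifier {
    // Hypothetical helper mirroring the interceptor's logic, but with
    // inclusive ranges and a guard against empty bodies.
    static String classify(byte[] body) {
        if (body == null || body.length == 0) {
            return null; // nothing to inspect; leave the headers untouched
        }
        byte first = body[0];
        if (first >= 'a' && first <= 'z') {
            return "letter";
        }
        if (first >= '0' && first <= '9') {
            return "number";
        }
        return null; // e.g. uppercase or punctuation: no "type" header
    }

    public static void main(String[] args) {
        for (String s : new String[] {"a", "hello", "z", "0", "9", "42", "X", ""}) {
            byte[] body = s.getBytes(StandardCharsets.UTF_8);
            System.out.println("'" + s + "' -> " + classify(body));
        }
    }
}
```

Inside `intercept(Event)`, the same checks would replace the strict comparisons, with `event.getHeaders().put("type", ...)` called only when the result is non-null.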

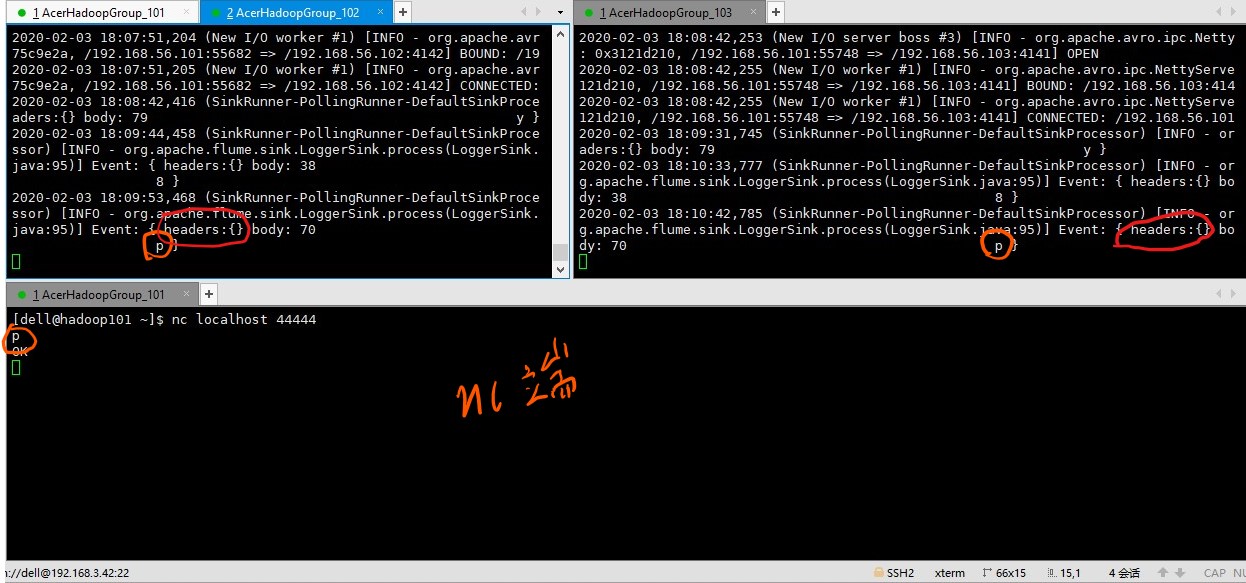

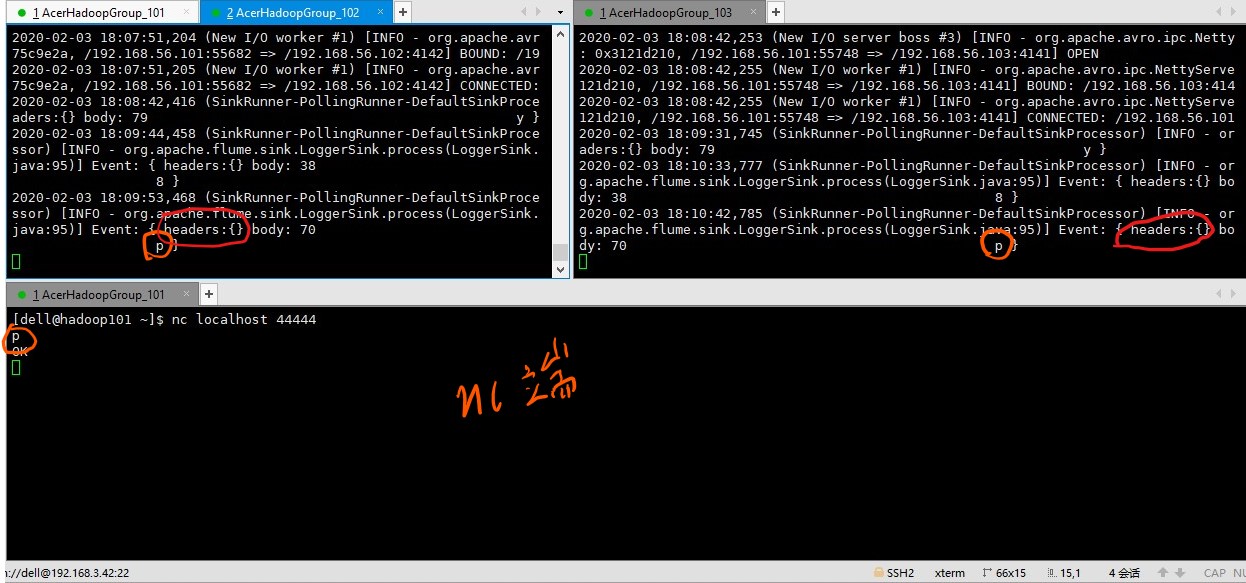

Screenshots taken at runtime

Why are my headers empty? I definitely put the jar I built with Maven into the flume/lib directory.

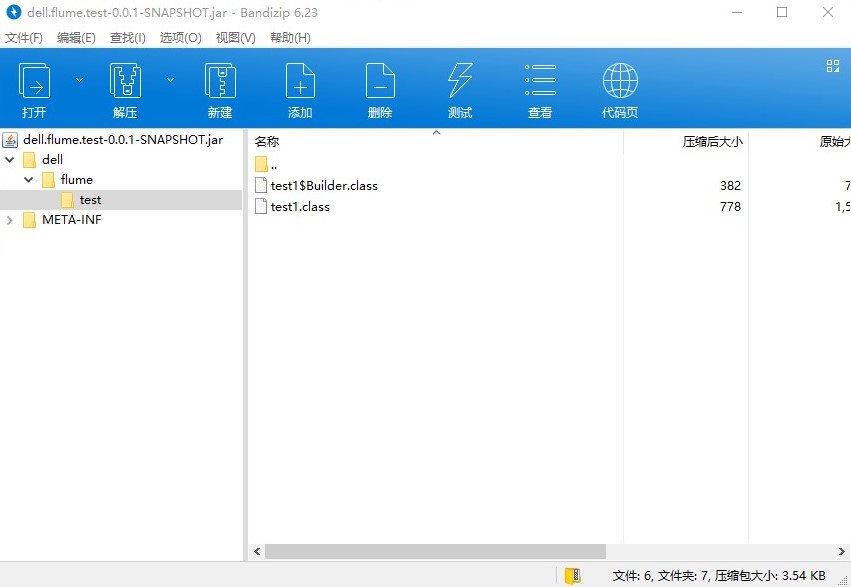

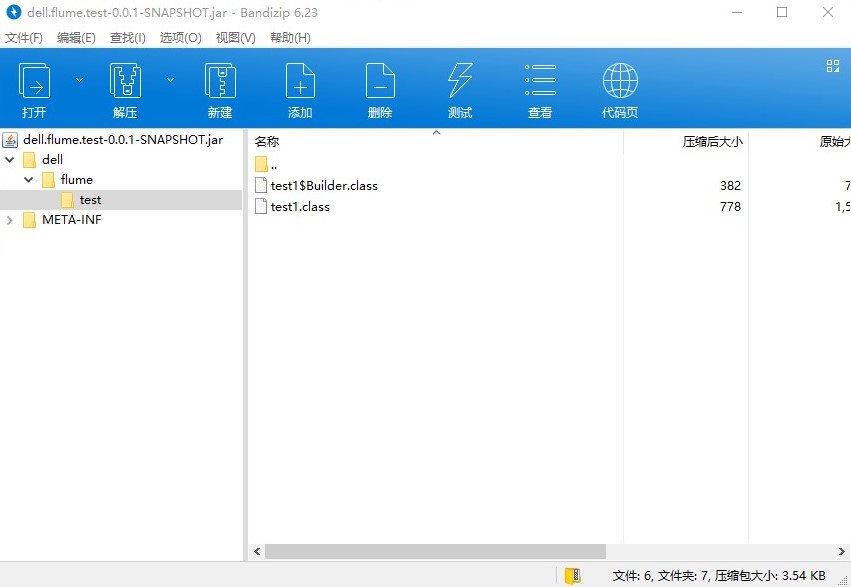

Below is a screenshot of the jar file I built.

Here is my POM with the dependencies I use:

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <groupId>test.flume</groupId>
        <artifactId>dell.flume.test</artifactId>
        <version>0.0.1-SNAPSHOT</version>
        <dependencies>
            <dependency>
                <groupId>org.apache.flume</groupId>
                <artifactId>flume-ng-core</artifactId>
                <version>1.7.0</version>
            </dependency>
            <dependency>
                <groupId>org.apache.logging.log4j</groupId>
                <artifactId>log4j-core</artifactId>
                <version>2.8.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-common</artifactId>
                <version>2.7.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-client</artifactId>
                <version>2.7.2</version>
            </dependency>
            <dependency>
                <groupId>org.apache.hadoop</groupId>
                <artifactId>hadoop-hdfs</artifactId>
                <version>2.7.2</version>
            </dependency>
            <dependency>
                <groupId>jdk.tools</groupId>
                <artifactId>jdk.tools</artifactId>
                <version>1.8</version>
                <scope>system</scope>
                <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
            </dependency>
        </dependencies>
    </project>

All of these versions match the ones on the cluster.

What am I doing wrong?

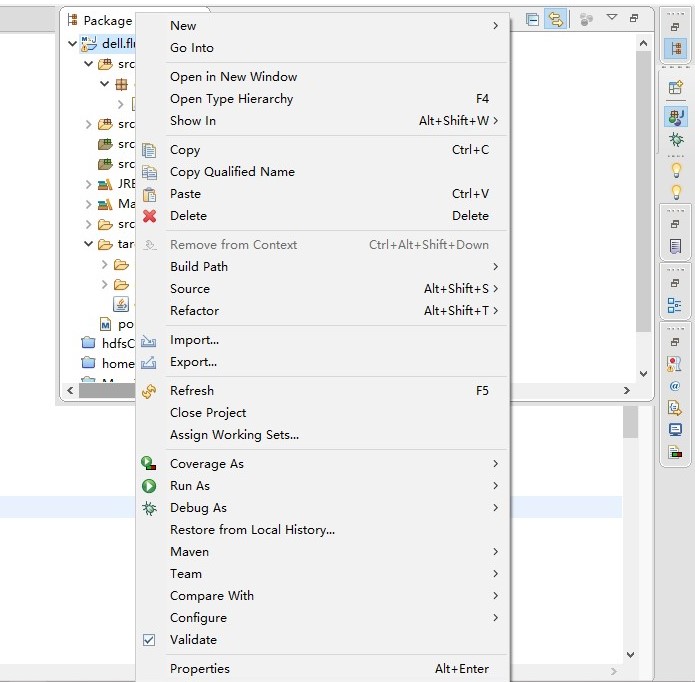

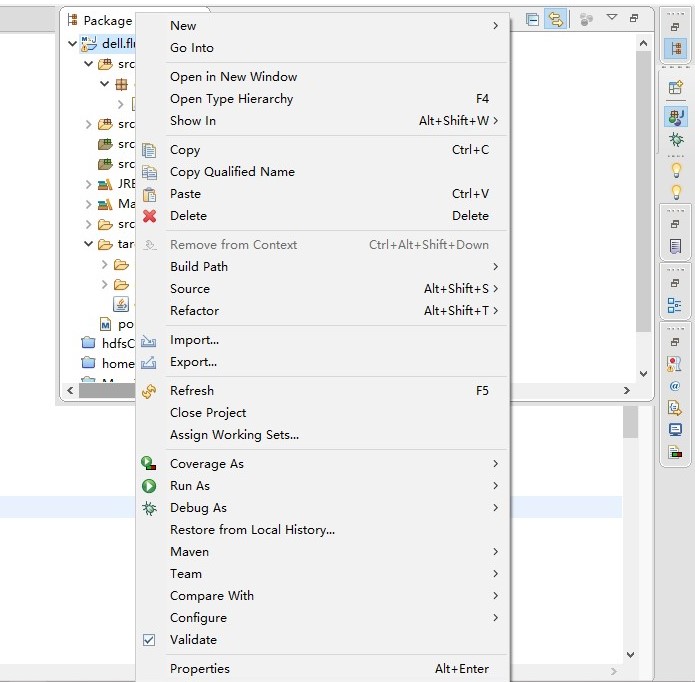

Here is how I build the jar as well:

I right-click the project, run Maven clean, then Maven build, and finally Maven install to produce the jar. The jar I copied to the cluster is the one produced by Maven install.
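
Since the jar comes from Maven install, it may be worth confirming that it contains both `test1.class` and the nested `test1$Builder.class` that `interceptors.i1.type` names. A small stdlib sketch using `java.util.jar.JarFile`; the class `JarCheck` and its methods are hypothetical, and the jar path is whatever was copied into flume/lib.

```java
import java.io.IOException;
import java.util.jar.JarFile;

public class JarCheck {
    // Converts a binary class name such as "dell.flume.test.test1$Builder"
    // into the entry path it should have inside a jar.
    static String entryNameFor(String binaryName) {
        return binaryName.replace('.', '/') + ".class";
    }

    // Returns true if the jar at jarPath contains the given class.
    static boolean containsClass(String jarPath, String binaryName) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getEntry(entryNameFor(binaryName)) != null;
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.out.println("usage: java JarCheck <path-to-jar>");
            return;
        }
        for (String cls : new String[] {"dell.flume.test.test1", "dell.flume.test.test1$Builder"}) {
            System.out.println(cls + " present: " + containsClass(args[0], cls));
        }
    }
}
```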

Flume's log file shows no exceptions, but I'll paste it anyway:

    03 二月 2020 18:22:26,861 INFO [agent-shutdown-hook] (org.apache.flume.instrumentation.MonitoredCounterGroup.stop:177) - Shutdown Metric for type: SINK, name: k1. sink.event.drain.attempt == 3
    03 二月 2020 18:22:26,862 INFO [agent-shutdown-hook] (org.apache.flume.instrumentation.MonitoredCounterGroup.stop:177) - Shutdown Metric for type: SINK, name: k1. sink.event.drain.sucess == 3
    03 二月 2020 18:22:26,862 INFO [agent-shutdown-hook] (org.apache.flume.sink.AbstractRpcSink.stop:320) - Rpc sink k1 stopped. Metrics: SINK:k1{sink.batch.underflow=3, sink.connection.failed.count=0, sink.connection.closed.count=1, sink.event.drain.attempt=3, sink.batch.complete=0, sink.event.drain.sucess=3, sink.connection.creation.count=1, sink.batch.empty=114}

The garbled characters (only in the date field) don't matter; there are no Java errors or anything similar.

That's everything. The goal is for a1 to use the interceptor so that letters and numbers are printed on different VMs (agents).

The question is long, but I hope it's described clearly enough. I'd really appreciate a solution that actually works. Thanks!

Oh, and I also tried specifying the jar with -C; it didn't help.
