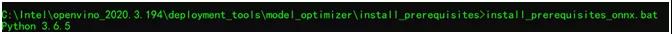

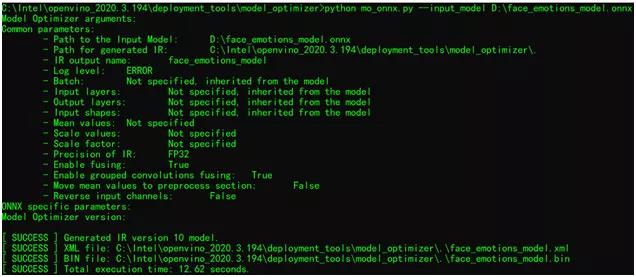

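import torch

model = torch.load("./face_emotions_model.pt")  # loads the whole pickled model
model.cuda()  # move the weights to the GPU so they match the CUDA dummy input
model.eval()  # switch dropout/batch-norm layers to inference behavior before export

dummy_input = torch.randn(1, 3, 64, 64, device='cuda')
output = model(dummy_input)  # sanity-check forward pass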

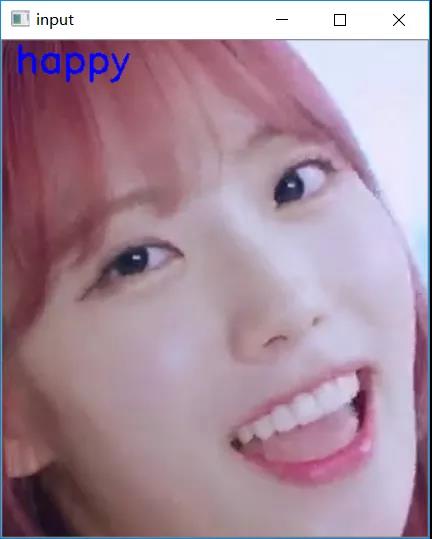

torch.onnx.export(model, dummy_input, "face_emotions_model.onnx", output_names=["output"], verbose=True)

import cv2 as cv

landmark_net = cv.dnn.readNetFromONNX("landmarks_cnn.onnx")

image = cv.imread("D:/facedb/test/464.jpg")
cv.imshow("input", image)
h, w, c = image.shape

blob = cv.dnn.blobFromImage(image, 0.00392, (64, 64), (0.5, 0.5, 0.5), False) / 0.5
print(blob)
landmark_net.setInput(blob)
lm_pts = landmark_net.forward()
print(lm_pts)

lm_pts = lm_pts.reshape(-1, 2)  # assumes the output is a flat run of (x, y) pairs
for x, y in lm_pts:
    print(x, y)
    x1 = x * w  # the code treats the outputs as normalized, so scale back to pixels
    y1 = y * h
    cv.circle(image, (int(x1), int(y1)), 2, (0, 0, 255), 2, 8, 0)

cv.imshow("Five-point face landmarks", image)
cv.imwrite("D:/landmark_det_result.png", image)
cv.waitKey(0)
cv.destroyAllWindows()

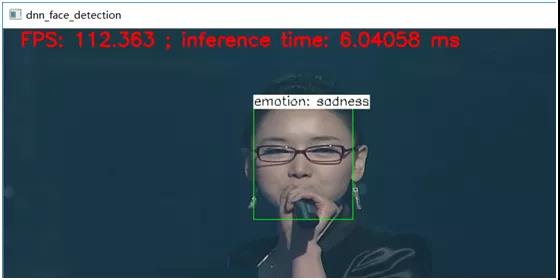
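The arithmetic behind the `blobFromImage(...) / 0.5` call in the Python snippet above can be checked by hand. `blobFromImage` subtracts the mean first and then multiplies by the scale factor, so each pixel becomes `scale * (pixel - mean)`. The sketch below reproduces that for a single value in plain Python. The helper names `blob_value` and `zero_centered` are made up for illustration, and `zero_centered` is only a common reference normalization, not something the post states the model was trained with.

```python
def blob_value(pixel, scale=0.00392, mean=0.5, divisor=0.5):
    """Value one channel ends up with after blobFromImage(...) / divisor."""
    # blobFromImage subtracts the mean first, then multiplies by the scale factor.
    return scale * (pixel - mean) / divisor


def zero_centered(pixel):
    """Hypothetical reference: (pixel / 255 - 0.5) / 0.5, mapping 0..255 to -1..1."""
    return (pixel / 255.0 - 0.5) / 0.5


# Compare the two mappings at the ends and the middle of the 8-bit range.
for p in (0, 128, 255):
    print(p, round(blob_value(p), 4), round(zero_centered(p), 4))
```

With these parameters the mean of 0.5 is subtracted from raw 0..255 pixel values, so the result spans roughly 0 to 2 rather than -1 to 1. Whether that matches the preprocessing used when the model was trained is not stated in the post, so it is worth comparing against the training code.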

// Load the OpenVINO IR model (.xml/.bin) and run it on the CPU via the Inference Engine backend
dnn::Net emtion_net = readNetFromModelOptimizer(emotion_xml, emotion_bin);
emtion_net.setPreferableTarget(DNN_TARGET_CPU);
emtion_net.setPreferableBackend(DNN_BACKEND_INFERENCE_ENGINE);

// Crop the detected face and convert it to a 64x64 input blob
Rect box(x1, y1, x2 - x1, y2 - y1);
Mat roi = frame(box);
Mat face_blob = blobFromImage(roi, 0.00392, Size(64, 64), Scalar(0.5, 0.5, 0.5), false, false);
emtion_net.setInput(face_blob);
Mat probs = emtion_net.forward();

// Find the class with the highest score among the 8 outputs
int index = 0;
float max = -1;
for (int i = 0; i < 8; i++) {
    const float *scores = probs.ptr<float>(0, i, 0);
    float score = scores[0];
    if (max < score) {
        max = score;
        index = i;
    }
}
rectangle(frame, box, Scalar(0, 255, 0));
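The selection loop in the C++ snippet above is a plain argmax over the 8 output scores. A minimal Python sketch of the same logic follows. The scores are invented for illustration, and the `class_N` label names are placeholders, since the post does not list the actual emotion labels.

```python
def top_class(scores):
    """Return (index, score) of the largest entry, mirroring the C++ loop."""
    index, best = 0, -1.0  # same starting values as the C++ version
    for i, score in enumerate(scores):
        if best < score:  # strict comparison keeps the first of any tied scores
            best, index = score, i
    return index, best


# Hypothetical scores for 8 classes; real values come from the network output.
scores = [0.01, 0.02, 0.70, 0.05, 0.10, 0.04, 0.05, 0.03]
labels = [f"class_{i}" for i in range(len(scores))]  # placeholder names

index, best = top_class(scores)
print(labels[index], best)
```

Starting the running maximum at -1 works when the outputs are probabilities. If the network emitted raw logits, which can all fall below -1, initializing from the first score would be the safer choice.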