
Design and develop a speech recognition application system.

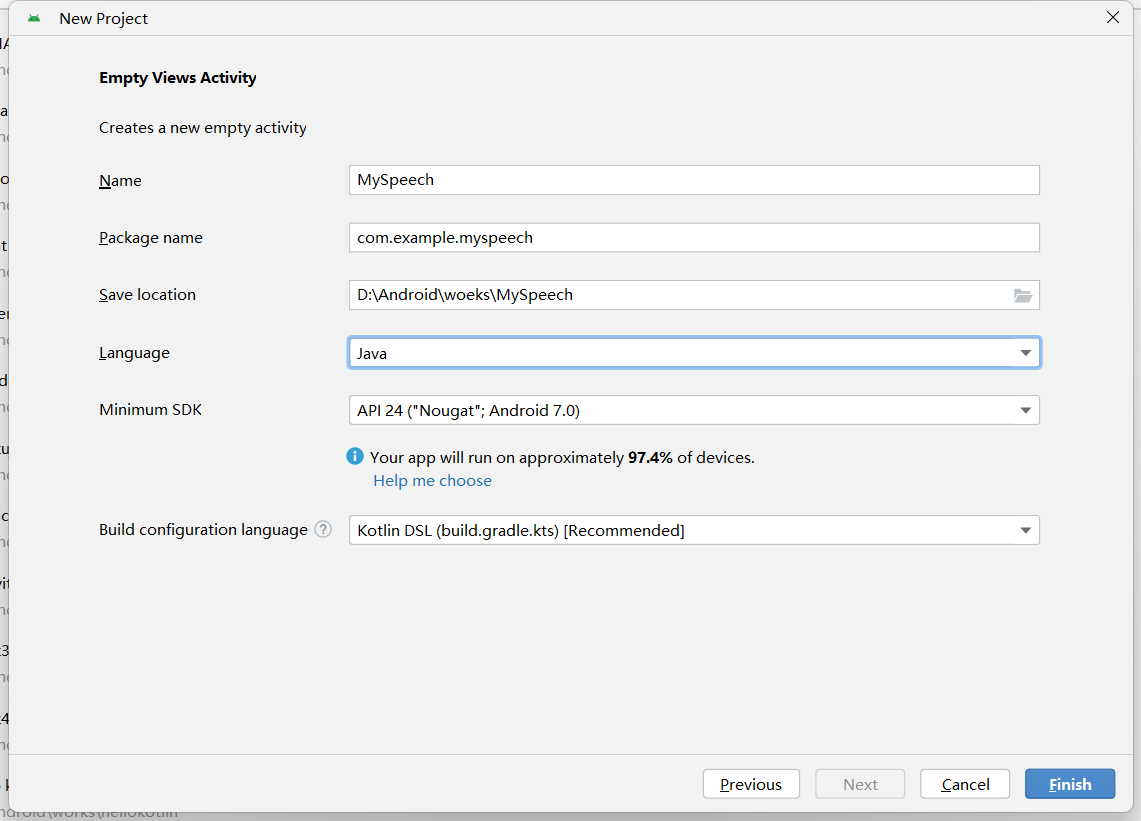

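Develop an Android speech recognition system. The task calls for implementing speech recognition with RecognizerIntent; the implementation below uses the iFlytek SDK's SpeechRecognizer and RecognizerDialog instead.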

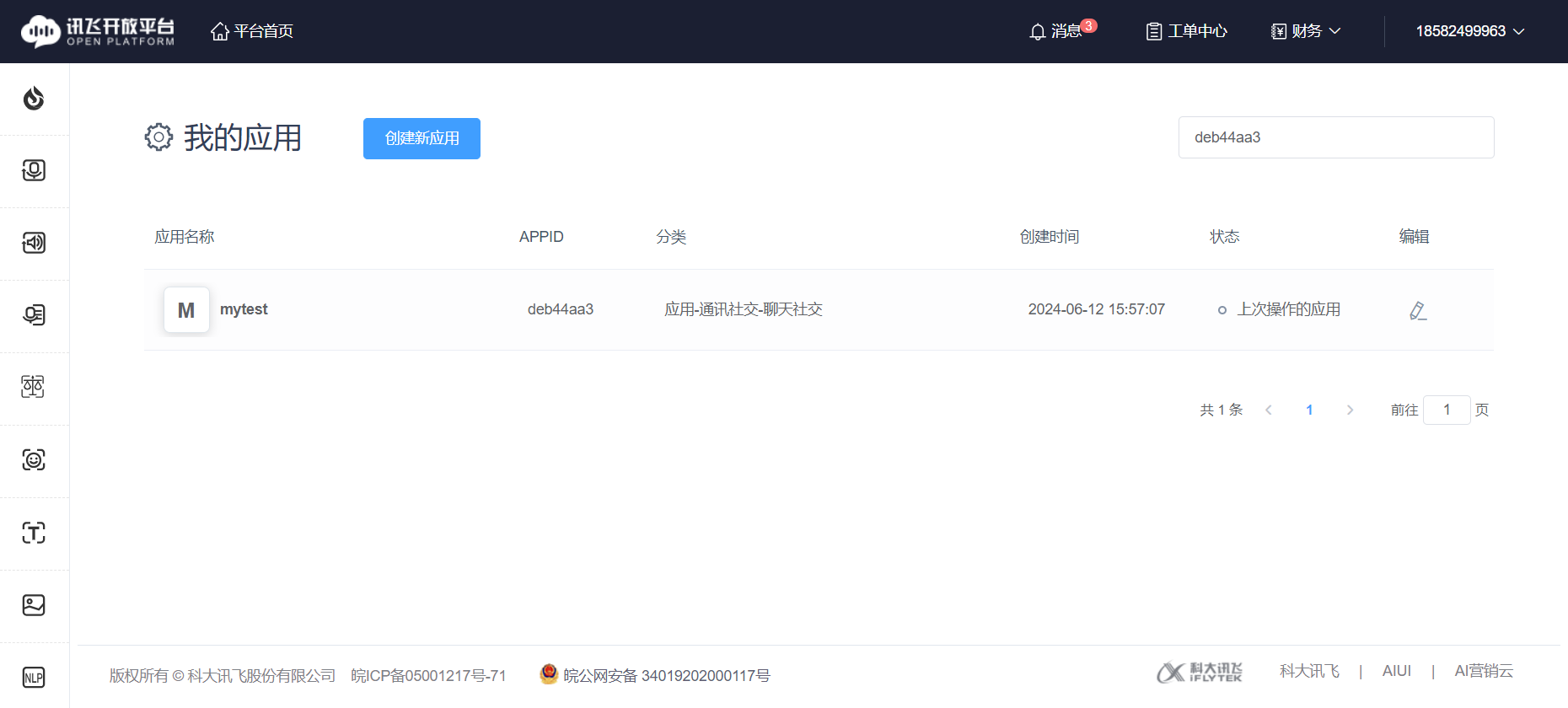

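Register at https://www.xfyun.cn/?jump=login, then create an application and download the corresponding SDK. The application's AppID is the value passed to SpeechUtility.createUtility in MainActivity.

Complete the real-name verification.

Next, set the wake-up word.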

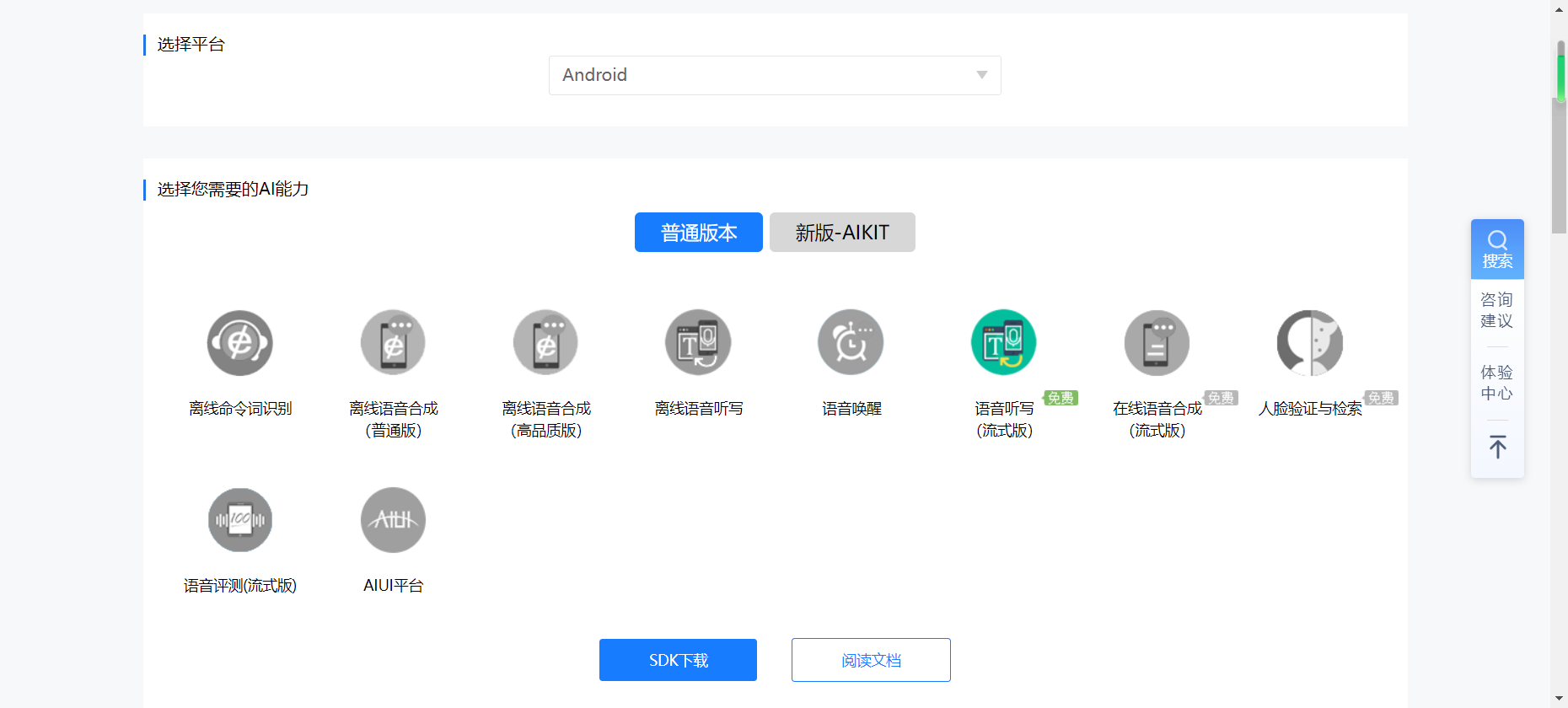

Then start the download.

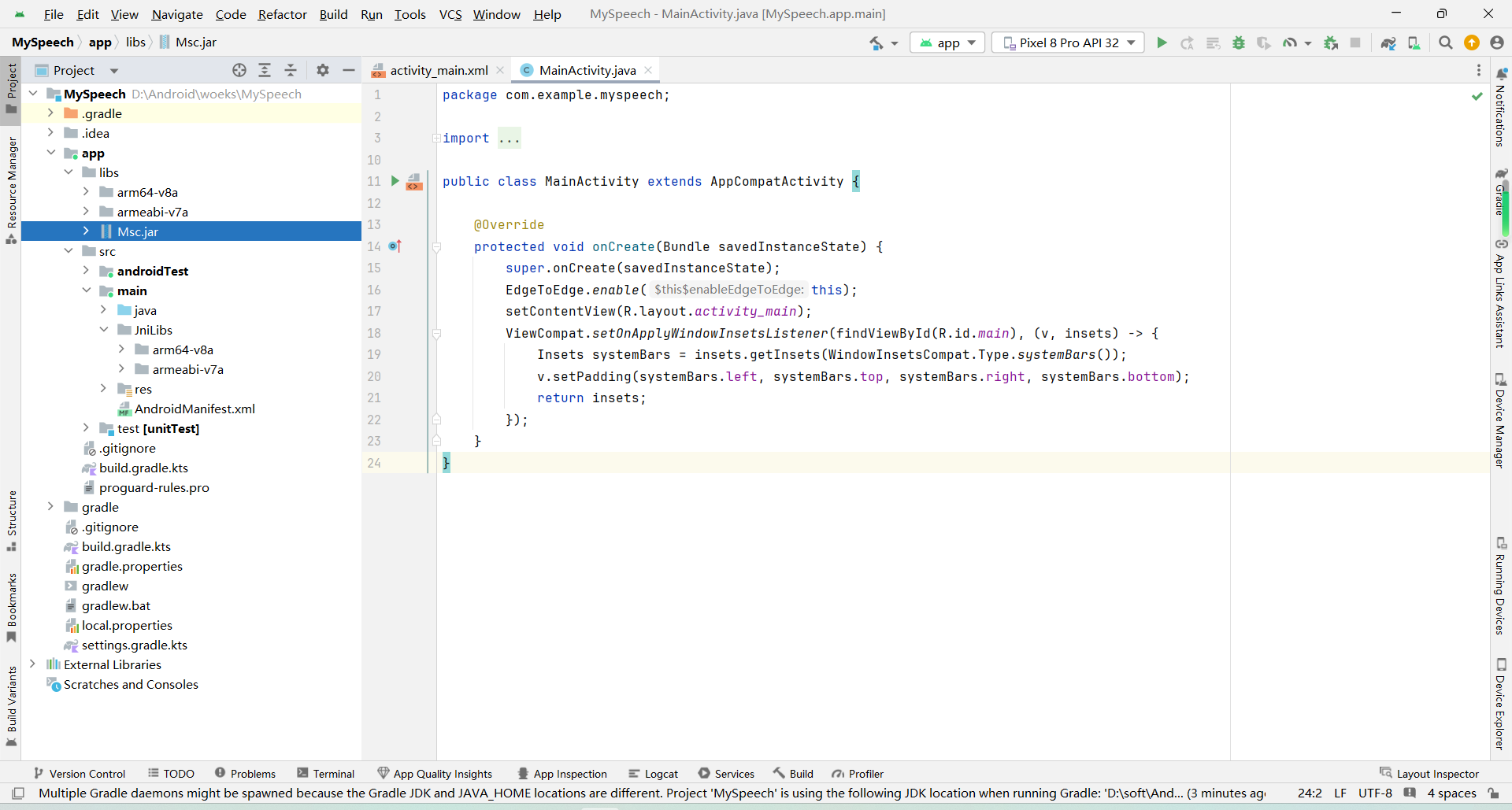

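Copy the SDK's libs into the project's libs directory, then add Msc.jar as a library (right-click it in Android Studio and choose Add As Library).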

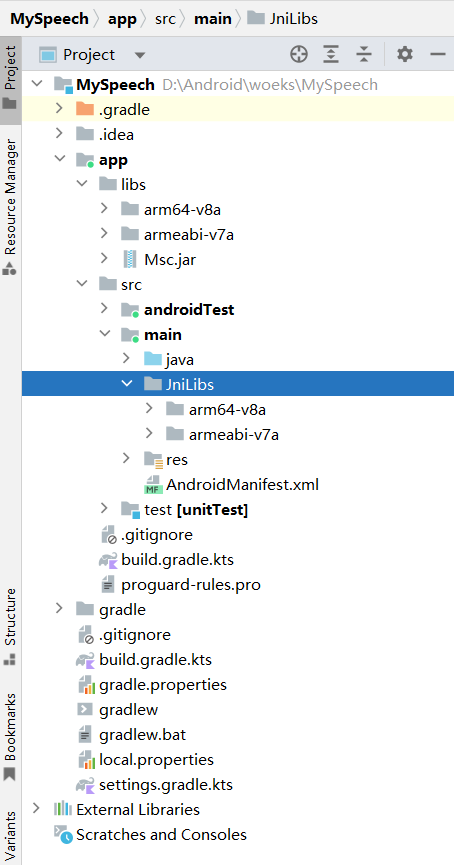

Under the project's "/module/src/main/", create a jniLibs folder and copy into it the folders under "/Demo/libs/" that contain the .so files.

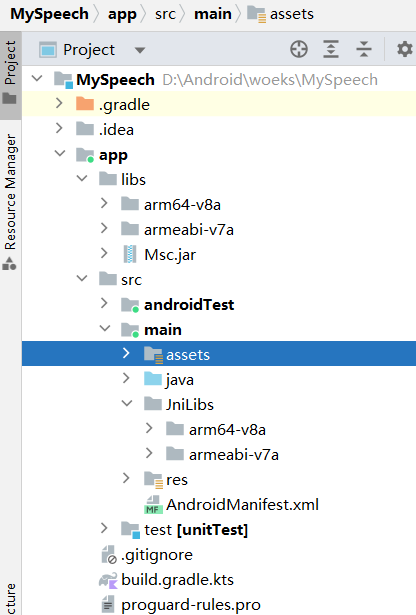
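If libmsc.so ends up in the wrong place, the recognizer cannot be created and MainActivity shows its "check that libmsc.so is placed correctly" message. The following is a minimal sketch of a desktop-side check of the copied layout, run before building. The ABI folder names (arm64-v8a, armeabi-v7a) are assumptions; pass whatever folders the downloaded Demo/libs actually contains. The class and method names are hypothetical.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: checks that every ABI folder under jniLibs contains the native library
class JniLibsCheck {

    /** Returns the ABI folders under jniLibsDir that are missing libName (e.g. "libmsc.so"). */
    static List<String> missingAbis(Path jniLibsDir, List<String> abis, String libName) {
        List<String> missing = new ArrayList<>();
        for (String abi : abis) {
            // Expected layout: src/main/jniLibs/<abi>/<libName>
            if (!Files.isRegularFile(jniLibsDir.resolve(abi).resolve(libName))) {
                missing.add(abi);
            }
        }
        return missing;
    }
}
```

For example, `JniLibsCheck.missingAbis(Path.of("app/src/main/jniLibs"), List.of("arm64-v8a", "armeabi-v7a"), "libmsc.so")` returns an empty list when every assumed ABI folder holds the library.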

Copy the files under "Demo/src/main/assets/" into the corresponding folder of the project.

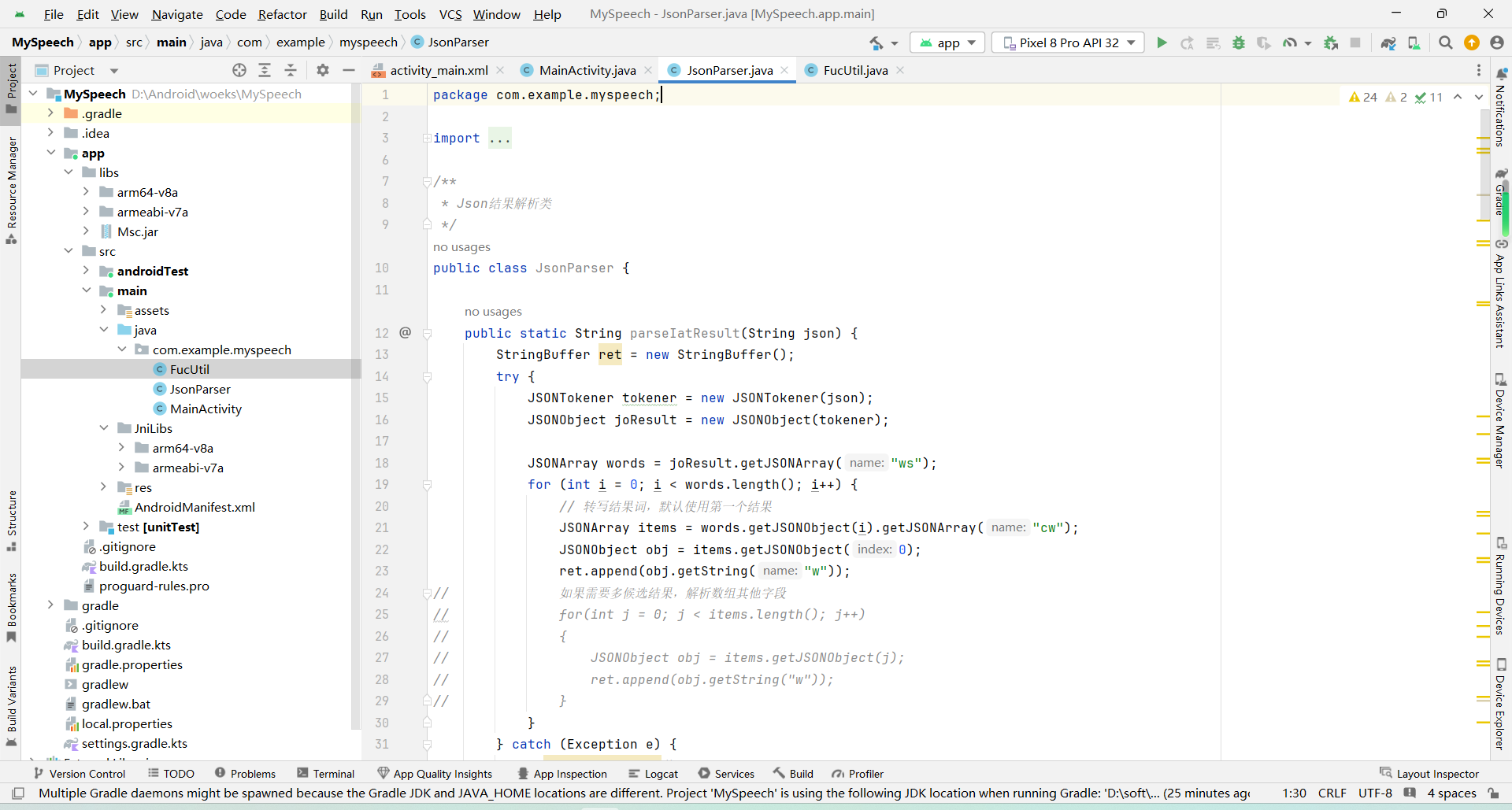
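executeStream() in MainActivity later reads assets/iattest.wav and writes it to the recognizer, so the copied file must be in a format the service accepts. This sketch assumes that format is 16-bit mono PCM at 16 kHz or 8 kHz, which is what the SDK demo's comments describe; confirm it against the iFlytek documentation. It parses only the canonical 44-byte RIFF/WAVE header, assuming the "fmt " chunk starts at byte 12. That layout holds for simple WAV files but not for every file. The class name is hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: reads the format fields of a canonical 44-byte WAV header
class WavFormat {
    final int audioFormat;   // 1 = uncompressed PCM
    final int channels;      // 1 = mono
    final int sampleRate;    // samples per second (Hz)
    final int bitsPerSample; // e.g. 16

    private WavFormat(int audioFormat, int channels, int sampleRate, int bitsPerSample) {
        this.audioFormat = audioFormat;
        this.channels = channels;
        this.sampleRate = sampleRate;
        this.bitsPerSample = bitsPerSample;
    }

    /** Parses the header; assumes the "fmt " chunk starts at byte 12 (canonical layout). */
    static WavFormat parse(byte[] wav) {
        if (wav.length < 44
                || !tag(wav, 0).equals("RIFF")
                || !tag(wav, 8).equals("WAVE")
                || !tag(wav, 12).equals("fmt ")) {
            throw new IllegalArgumentException("not a canonical RIFF/WAVE header");
        }
        // WAV header fields are little-endian
        ByteBuffer b = ByteBuffer.wrap(wav).order(ByteOrder.LITTLE_ENDIAN);
        return new WavFormat(
                b.getShort(20) & 0xFFFF,  // audio format
                b.getShort(22) & 0xFFFF,  // channel count
                b.getInt(24),             // sample rate
                b.getShort(34) & 0xFFFF); // bits per sample
    }

    /** True for 16-bit mono PCM at 8 kHz or 16 kHz, the format assumed above. */
    boolean looksAcceptable() {
        return audioFormat == 1 && channels == 1 && bitsPerSample == 16
                && (sampleRate == 8000 || sampleRate == 16000);
    }

    // Reads a 4-character ASCII chunk tag at the given offset
    private static String tag(byte[] wav, int offset) {
        return new String(wav, offset, 4, StandardCharsets.US_ASCII);
    }
}
```

On a development machine you would read the first 44 bytes of the file, for example with `Files.readAllBytes`, and call `WavFormat.parse(...).looksAcceptable()`.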

Also copy the two helper classes from the sample, JsonParser and FucUtil, which MainActivity below calls.
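MainActivity calls FucUtil.splitBuffer(audioData, audioData.length, audioData.length / 3) to cut the audio into pieces before passing each one to writeAudio(). The real implementation ships with the demo. The following is a hypothetical stand-in, not its source, written only to show the assumed chunking behaviour: consecutive chunks of at most chunkSize bytes, with a possibly shorter last chunk.

```java
import java.util.ArrayList;
import java.util.Arrays;

// Hypothetical stand-in for the demo's FucUtil.splitBuffer (not its actual source)
class ChunkSplitter {

    /** Splits the first `length` bytes of `buffer` into consecutive chunks of at most `chunkSize` bytes. */
    static ArrayList<byte[]> splitBuffer(byte[] buffer, int length, int chunkSize) {
        ArrayList<byte[]> chunks = new ArrayList<>();
        if (buffer == null || length <= 0 || chunkSize <= 0 || length > buffer.length) {
            return chunks; // nothing sensible to split
        }
        int offset = 0;
        while (offset < length) {
            // The last chunk takes whatever is left
            int n = Math.min(chunkSize, length - offset);
            chunks.add(Arrays.copyOfRange(buffer, offset, offset + n));
            offset += n;
        }
        return chunks;
    }
}
```

Because `length / 3` rounds down, a 10-byte buffer is split into chunks of 3, 3, 3 and 1 bytes. In general the file is written in three or four calls, each followed by the one-second sleep in executeStream().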

The final result is shown below:

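Add the following permissions to AndroidManifest.xml:

```xml
<uses-permission android:name="android.permission.INTERNET" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />
<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.READ_PHONE_STATE" />
```

These permissions allow the app to access the internet, record audio, use the device camera, and read the phone state and identity information. RECORD_AUDIO, CAMERA and READ_PHONE_STATE are dangerous permissions, so on Android 6.0 and later the user must also grant them at runtime. Declaring them in the manifest is not enough.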

The final AndroidManifest.xml:

```xml
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools">

    <uses-feature
        android:name="android.hardware.camera"
        android:required="false" />

    <application
        android:allowBackup="true"
        android:dataExtractionRules="@xml/data_extraction_rules"
        android:fullBackupContent="@xml/backup_rules"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:roundIcon="@mipmap/ic_launcher_round"
        android:supportsRtl="true"
        android:theme="@style/Theme.MySpeech"
        tools:targetApi="31">
        <activity
            android:name=".MainActivity"
            android:exported="true">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>

    <uses-permission android:name="android.permission.INTERNET" />
    <uses-permission android:name="android.permission.RECORD_AUDIO" />
    <uses-permission android:name="android.permission.CAMERA" />
    <uses-permission android:name="android.permission.READ_PHONE_STATE" />

</manifest>
```

activity_main.xml layout file:

```xml
<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    android:background="#519D9E"
    android:gravity="center_horizontal"
    android:orientation="vertical"
    android:paddingLeft="10dp"
    android:paddingRight="10dp">

    <!-- Title (layout_centerInParent removed: it only applies inside a RelativeLayout) -->
    <TextView
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_margin="15dp"
        android:text="iFlytek Dictation Demo"
        android:textSize="30sp" />

    <!-- Dictation results; weight 1 makes it fill the remaining vertical space -->
    <EditText
        android:id="@+id/iat_text"
        android:layout_width="match_parent"
        android:layout_height="0dp"
        android:layout_weight="1"
        android:gravity="top|start"
        android:hint="Dictation results appear here"
        android:paddingBottom="10dp"
        android:textColor="@color/white"
        android:textColorHint="@color/white"
        android:textSize="20sp" />

    <!-- Start / Stop / Cancel buttons sharing one row equally -->
    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_marginTop="10dp"
        android:layout_marginBottom="2dp"
        android:gravity="center_horizontal"
        android:orientation="horizontal">

        <Button
            android:id="@+id/iat_recognize"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:text="Start"
            android:textSize="20sp" />

        <Button
            android:id="@+id/iat_stop"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:text="Stop"
            android:textSize="20sp" />

        <Button
            android:id="@+id/iat_cancel"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_weight="1"
            android:text="Cancel"
            android:textSize="20sp" />
    </LinearLayout>

    <!-- Recognizes the bundled audio file instead of the microphone -->
    <Button
        android:id="@+id/iat_recognize_stream"
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:text="Audio stream recognition"
        android:textSize="20sp" />

</LinearLayout>
```

MainActivity.java file:

package com.example.myspeech;

import android.app.AlertDialog;

import android.os.Bundle;

import android.os.Environment;

import android.os.Handler;

import android.os.Message;

import android.util.Log;

import android.view.View;

import android.widget.Button;

import android.widget.EditText;

import android.widget.Toast;

import androidx.activity.ComponentActivity;

import com.iflytek.cloud.ErrorCode;

import com.iflytek.cloud.InitListener;

import com.iflytek.cloud.RecognizerListener;

import com.iflytek.cloud.RecognizerResult;

import com.iflytek.cloud.SpeechConstant;

import com.iflytek.cloud.SpeechError;

import com.iflytek.cloud.SpeechRecognizer;

import com.iflytek.cloud.SpeechUtility;

import com.iflytek.cloud.ui.RecognizerDialog;

import com.iflytek.cloud.ui.RecognizerDialogListener;

import org.json.JSONException;

import org.json.JSONObject;

import java.util.ArrayList;

import java.util.HashMap;

public class MainActivity extends ComponentActivity implements View.OnClickListener {

private static final String TAG = "MainActivity";

private SpeechRecognizer mIat;

private RecognizerDialog mIatDialog;

private HashMap<String, String> mIatResults = new HashMap<>();

private EditText mResultText;

private String language = "zh_cn";

private String resultType = "json";

private StringBuffer buffer = new StringBuffer();

private int handlerCode = 0x123;

private int resultCode = 0;

private AlertDialog dialog;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

SpeechUtility.createUtility(this, SpeechConstant.APPID + "=c9d19afd");

findViewById(R.id.iat_recognize).setOnClickListener(this);

findViewById(R.id.iat_recognize_stream).setOnClickListener(this);

findViewById(R.id.iat_stop).setOnClickListener(this);

findViewById(R.id.iat_cancel).setOnClickListener(this);

mResultText = findViewById(R.id.iat_text);

mIat = SpeechRecognizer.createRecognizer(this, mInitListener);

mIatDialog = new RecognizerDialog(MainActivity.this, mInitListener);

}

@Override

public void onClick(View view) {

if (null == mIat) {

showToast("创建对象失败,请确认 libmsc.so 放置正确,且有调用 createUtility 进行初始化");

return;

}

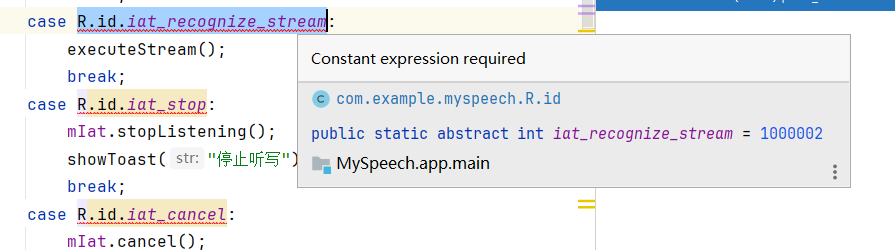

switch (view.getId()) {

case R.id.iat_recognize:

buffer.setLength(0);

mResultText.setText(null);

mIatResults.clear();

setParam();

mIatDialog.setListener(mRecognizerDialogListener);

mIatDialog.show();

showToast("开始听写");

break;

case R.id.iat_recognize_stream:

executeStream();

break;

case R.id.iat_stop:

mIat.stopListening();

showToast("停止听写");

break;

case R.id.iat_cancel:

mIat.cancel();

showToast("取消听写");

break;

}

}

private InitListener mInitListener = new InitListener() {

@Override

public void onInit(int code) {

Log.e(TAG, "SpeechRecognizer init() code = " + code);

if (code != ErrorCode.SUCCESS) {

showToast("初始化失败,错误码:" + code);

}

}

};

private RecognizerListener mRecognizerListener = new RecognizerListener() {

@Override

public void onBeginOfSpeech() {

showToast("开始说话");

}

@Override

public void onError(SpeechError error) {

showToast(error.getPlainDescription(true));

if (dialog != null) {

dialog.dismiss();

}

}

@Override

public void onEndOfSpeech() {

showToast("结束说话");

if (dialog != null) {

dialog.dismiss();

}

}

@Override

public void onResult(RecognizerResult results, boolean isLast) {

printResult(results);

if (isLast) {

Message message = Message.obtain();

message.what = handlerCode;

handler.sendMessageDelayed(message, 100);

}

}

@Override

public void onVolumeChanged(int volume, byte[] data) {

Log.e(TAG, "onVolumeChanged: " + data.length);

}

@Override

public void onEvent(int eventType, int arg1, int arg2, Bundle obj) {

}

};

private Handler handler = new Handler() {

@Override

public void handleMessage(Message msg) {

super.handleMessage(msg);

if (msg.what == handlerCode) {

executeStream();

}

}

};

private RecognizerDialogListener mRecognizerDialogListener = new RecognizerDialogListener() {

@Override

public void onResult(RecognizerResult results, boolean isLast) {

printResult(results);

}

@Override

public void onError(SpeechError error) {

showToast(error.getPlainDescription(true));

}

};

private void printResult(RecognizerResult results) {

String text = JsonParser.parseIatResult(results.getResultString());

String sn = null;

try {

JSONObject resultJson = new JSONObject(results.getResultString());

sn = resultJson.optString("sn");

} catch (JSONException e) {

e.printStackTrace();

}

mIatResults.put(sn, text);

StringBuffer resultBuffer = new StringBuffer();

for (String key : mIatResults.keySet()) {

resultBuffer.append(mIatResults.get(key));

}

mResultText.setText(resultBuffer.toString());

mResultText.setSelection(mResultText.length());

}

public void setParam() {

mIat.setParameter(SpeechConstant.PARAMS, null);

mIat.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);

mIat.setParameter(SpeechConstant.RESULT_TYPE, resultType);

mIat.setParameter(SpeechConstant.LANGUAGE, language);

mIat.setParameter(SpeechConstant.ACCENT, "mandarin");

mIat.setParameter(SpeechConstant.VAD_BOS, "5000");

mIat.setParameter(SpeechConstant.VAD_EOS, "1800");

mIat.setParameter(SpeechConstant.ASR_PTT, "1");

mIat.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");

mIat.setParameter(SpeechConstant.ASR_AUDIO_PATH, Environment.getExternalStorageDirectory() + "/msc/helloword.wav");

}

private void executeStream() {

buffer.setLength(0);

mResultText.setText(null);

mIatResults.clear();

setParam();

mIat.setParameter(SpeechConstant.AUDIO_SOURCE, "-1");

mIat.setParameter(SpeechConstant.LANGUAGE, language);

resultCode = mIat.startListening(mRecognizerListener);

if (resultCode != ErrorCode.SUCCESS) {

showToast("识别失败,错误码:" + resultCode);

} else {

byte[] audioData = FucUtil.readAudioFile(MainActivity.this, "iattest.wav");

if (audioData != null) {

showToast("开始音频流识别");

ArrayList<byte[]> bytes = FucUtil.splitBuffer(audioData, audioData.length, audioData.length / 3);

for (int i = 0; i < bytes.size(); i++) {

mIat.writeAudio(bytes.get(i), 0, bytes.get(i).length);

try {

Thread.sleep(1000);

} catch (Exception e) {

e.printStackTrace();

}

}

mIat.stopListening();

} else {

mIat.cancel();

showToast("读取音频流失败");

}

}

}

@Override

protected void onResume() {

super.onResume();

}

@Override

protected void onPause() {

super.onPause();

}

private void showToast(final String str) {

Toast.makeText(this, str, Toast.LENGTH_SHORT).show();

}

}
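printResult() stores one text fragment per sentence number (the JSON `sn` field) and rebuilds the displayed text from the map on every callback. The map's iteration order therefore determines the order of sentences on screen, and a plain HashMap guarantees no order. Below is a minimal SDK-independent sketch of the same merge. It deliberately swaps in a TreeMap keyed by the numeric sn. Because `optString("sn")` returns a String, sorting String keys would place "10" before "2", so the caller is assumed to parse sn to an int first. JSON parsing is omitted, and the class name is hypothetical.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: accumulates dictation fragments keyed by sentence number (sn)
class SnResultMerger {
    // TreeMap iterates keys in ascending numeric order, regardless of insertion order
    private final Map<Integer, String> fragments = new TreeMap<>();

    /** Stores (or replaces) the fragment for sentence number sn and returns the full text so far. */
    String put(int sn, String text) {
        fragments.put(sn, text);
        StringBuilder sb = new StringBuilder();
        for (String fragment : fragments.values()) {
            sb.append(fragment);
        }
        return sb.toString();
    }

    /** Drops all fragments, like mIatResults.clear() before a new session. */
    void clear() {
        fragments.clear();
    }
}
```

For example, calling `put(2, "world")` and then `put(1, "hello ")` returns "hello world". If results always arrive in sn order, a LinkedHashMap, which preserves insertion order, gives the same output.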

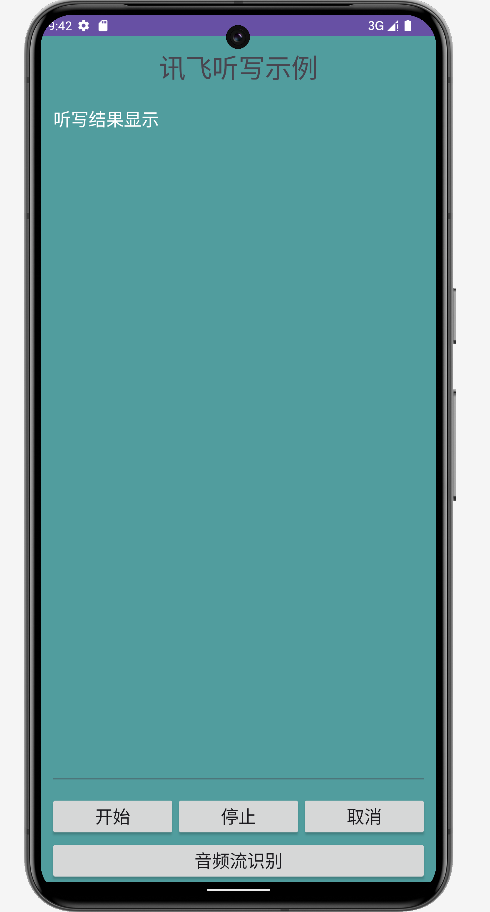

The run results are as follows:

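Through this experiment I gained a deeper understanding of how speech recognition is applied on the Android platform. Implementing speech recognition with the iFlytek SDK's SpeechRecognizer let me enjoy programming and also showed me how powerful technology can be for solving practical problems. I ran into quite a few challenges during development, but through repeated debugging and optimization I eventually got the system working. This experiment has strengthened my belief that only continuous learning and practice lead to steady progress.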