def build_model(self):
    # input : [batchsize, patch_sioze, patch_sioze, channel]
    self.X = tf.placeholder(tf.float32, [None, self.patch_sioze, self.patch_sioze, self.input_c_dim],
                            name='residual_image')
    self.X_ = tf.placeholder(tf.float32, [None, self.patch_sioze, self.patch_sioze, self.input_c_dim],
                             name='lower_image')
    # layer 1
    with tf.variable_scope('conv1'):
        layer_1_output = self.layer(self.X_, [3, 3, self.input_c_dim, 64], useBN=False)

    # layers 2 to 19: identical 3x3 conv + ReLU blocks with 64 feature maps,
    # created under the same variable scopes 'conv2' ... 'conv19'
    hidden = layer_1_output
    for layer_idx in range(2, 20):
        with tf.variable_scope('conv%d' % layer_idx):
            hidden = self.layer(hidden, [3, 3, 64, 64])
    layer_19_output = hidden

    # layer 20 (no ReLU)
    with tf.variable_scope('conv20'):
        self.Y = self.layernr(layer_19_output, [3, 3, 64, self.output_c_dim])
    # L2 loss
    self.Y_ = self.X + self.X_  # residual_image + lower_image
    self.loss = (1.0 / self.batch_size) * tf.nn.l2_loss(self.Y_ - self.Y)
    optimizer = tf.train.AdamOptimizer(self.lr, name='AdamOptimizer')
    self.train_step = optimizer.minimize(self.loss)
    tf.summary.scalar('loss', self.loss)
    # create this init op after all variables specified
    self.init = tf.global_variables_initializer()
    self.saver = tf.train.Saver()
    print(" - Initialize model successfully...")

def conv_layer(self, inputdata, weightshape, b_init, stridemode):
    # weights
    W = tf.get_variable('weights', weightshape,
                        initializer=tf.constant_initializer(get_conv_weights(weightshape, self.sess)))
    b = tf.get_variable('biases', [1, weightshape[-1]], initializer=tf.constant_initializer(b_init))
    # convolutional layer
    return tf.add(tf.nn.conv2d(inputdata, W, strides=stridemode, padding="SAME"), b)  # SAME with zero padding

def bn_layer(self, logits, output_dim, b_init=0.0):
    alpha = tf.get_variable('bn_alpha', [1, output_dim],
                            initializer=tf.constant_initializer(
                                get_bn_weights([1, output_dim], self.clip_b, self.sess)))
    beta = tf.get_variable('bn_beta', [1, output_dim],
                           initializer=tf.constant_initializer(b_init))
    return batch_normalization(logits, alpha, beta, isCovNet=True)

def layer(self, inputdata, filter_shape, b_init=0.0, stridemode=[1, 1, 1, 1], useBN=False):
    # conv -> (optional batch norm) -> ReLU
    logits = self.conv_layer(inputdata, filter_shape, b_init, stridemode)
    if useBN:
        output = tf.nn.relu(self.bn_layer(logits, filter_shape[-1]))
    else:
        output = tf.nn.relu(logits)
    return output

def layernr(self, inputdata, filter_shape, b_init=0.0, stridemode=[1, 1, 1, 1]):
    # conv only, no ReLU (used for the last layer)
    output = self.conv_layer(inputdata, filter_shape, b_init, stridemode)
    return output

def train(self):
    # init the variables
    self.sess.run(self.init)
    # get data
    test_files = glob('./data/test/{}/*.bmp'.format(self.testset))
    test_data = load_images(test_files)  # list of array of different size, 4-D, pixel value range is 0-255
    data = load_data(filepath='./data/img_clean_pats.npy')
    numBatch = int(data.shape[0] / self.batch_size)
    # print(" - Data shape = " + str(data.shape))
    writer = tf.summary.FileWriter('./logs', self.sess.graph)
    merged = tf.summary.merge_all()
    iter_num = 0
    print(" - Start training : ")
    start_time = time.time()

    for epoch in range(self.epoch):
        for batch_id in range(numBatch):
            batch_images = data[batch_id * self.batch_size:(batch_id + 1) * self.batch_size, :, :, :]
            # buffers for the degraded and the original patches
            # (the hard-coded 128 and 50 are replaced by batch size and patch size)
            train_images = np.zeros((self.batch_size, self.patch_sioze, self.patch_sioze), dtype="uint8")
            batch_im = np.zeros((self.batch_size, self.patch_sioze, self.patch_sioze), dtype="uint8")
            # batch_images = np.array(batch_images / 255.0, dtype=np.float32)  # normalize the data to 0-1
            for i in range(self.batch_size):
                im = batch_images[i, :, :, :]
                im = Image.fromarray(im.astype(np.uint8))
                # downscale by self.scale, then upscale back to the original size
                # (Image.resize expects a (width, height) tuple)
                newsize = (int(im.size[0] / self.scale), int(im.size[1] / self.scale))
                im1 = im.resize(newsize, resample=Image.BICUBIC)
                newsize1 = (int(im.size[0]), int(im.size[1]))
                im1 = im1.resize(newsize1, resample=Image.BICUBIC)
                im1 = np.array(im1, dtype="uint8")
                im1 = im1[:, :, 0]
                # a PIL image cannot be sliced, so convert it to an array first
                im = np.array(im, dtype="uint8")
                im = im[:, :, 0]
                train_images[i, :, :] = im1[:, :]
                batch_im[i, :, :] = im[:, :]
                # print(im1.shape)
            train_images = np.reshape(train_images, (self.batch_size, im1.shape[0], im1.shape[1], 1))
            batch_im = np.reshape(batch_im, (self.batch_size, im.shape[0], im.shape[1], 1))
            train_images = np.array(train_images / 255.0, dtype=np.float32)
            batch_im = np.array(batch_im / 255.0, dtype=np.float32)  # normalize the data to 0-1
            # train_images = add_residual(batch_images, sigma1, self.sess)
            _, loss, summary = self.sess.run([self.train_step, self.loss, merged],
                                             feed_dict={self.X: train_images, self.X_: batch_im})
            print("Epoch: [%2d] [%4d/%4d] time: %4.4f, loss: %.6f"
                  % (epoch + 1, batch_id + 1, numBatch,
                     time.time() - start_time, loss))
            iter_num += 1
            writer.add_summary(summary, iter_num)

        # save the model
        if np.mod(epoch, self.eval_every_epoch) == 0:
            self.save(iter_num)
        # evaluate on the test set
        if np.mod(iter_num, self.save_every_epoch) == 0:
            self.evaluate(epoch, iter_num, test_data)  # test_data value range is 0-255
    print(" - Finish training.")

def save(self, iter_num):
    model_name = "VDSR.model"
    model_dir = "%s_%s_%s" % (self.trainset,
                              self.batch_size, self.patch_sioze)
    checkpoint_dir = os.path.join(self.ckpt_dir, model_dir)
    if not os.path.exists(checkpoint_dir):
        os.makedirs(checkpoint_dir)
    print(" - Saving model...")
    self.saver.save(self.sess,
                    os.path.join(checkpoint_dir, model_name),
                    global_step=iter_num)

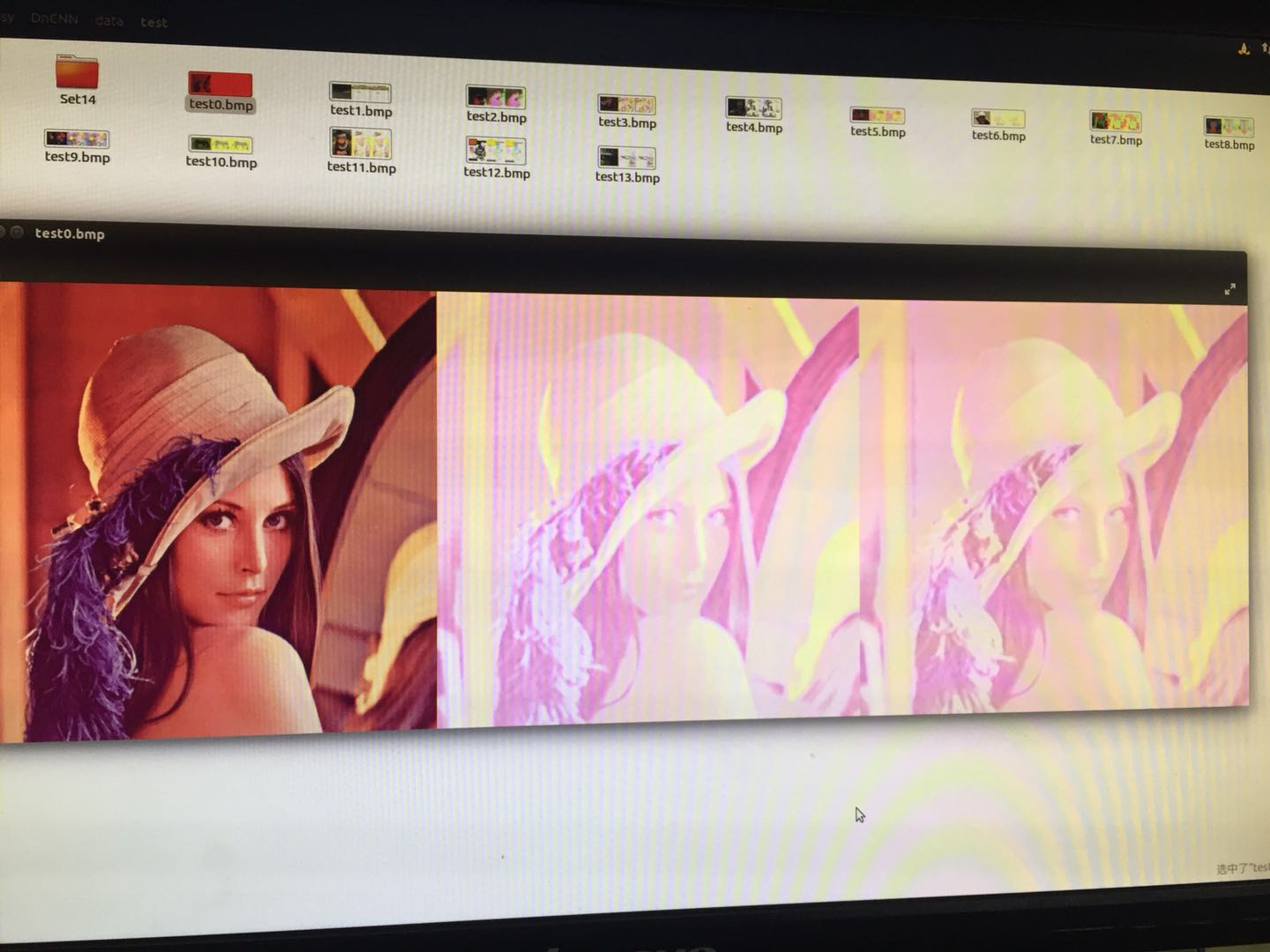

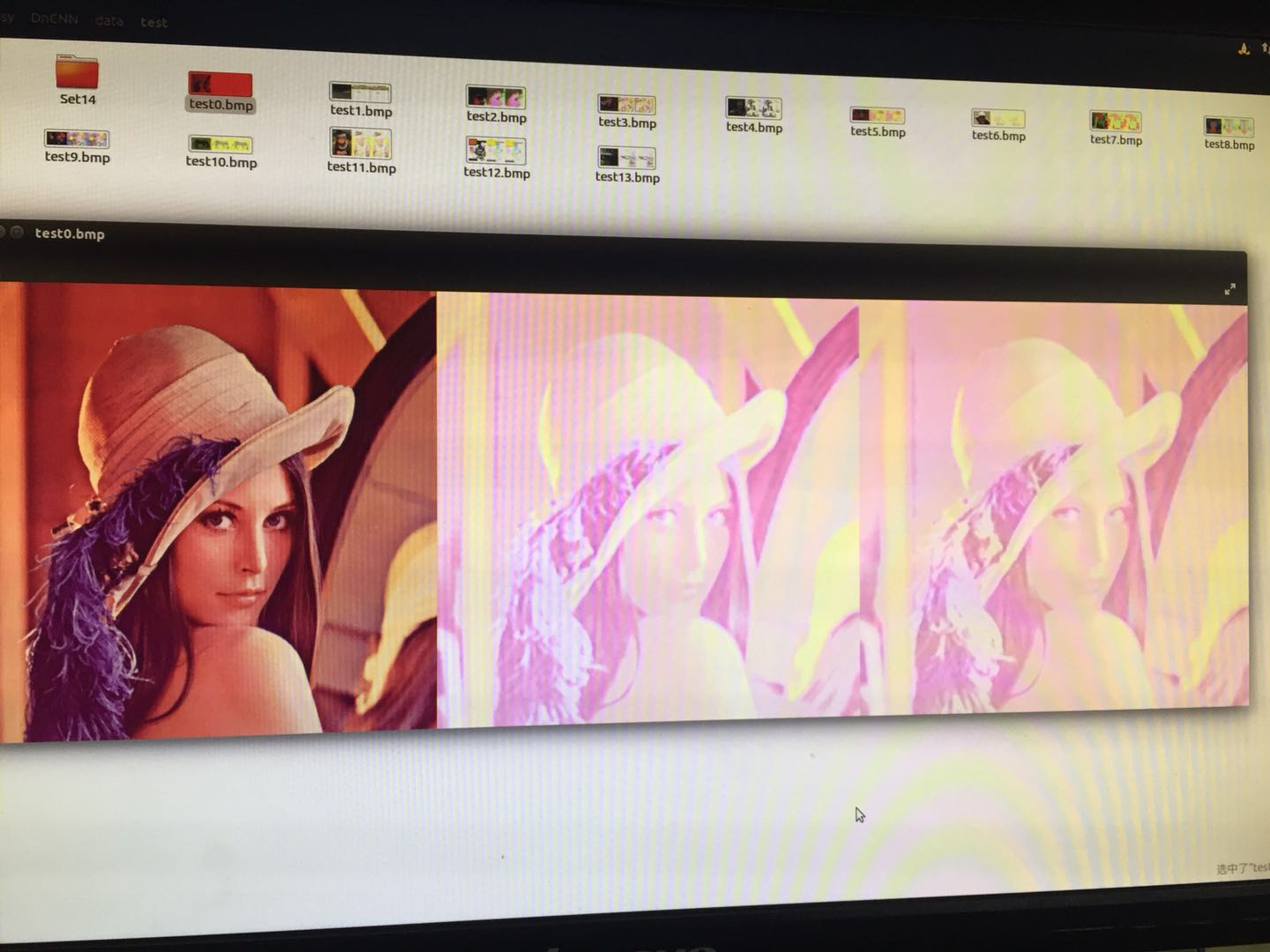

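I can't figure out where things went wrong, but the images come out wrong when they are restored. Could someone more experienced point me in the right direction?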
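One thing that may be worth checking is which tensor plays which role. In the code above the network reads `self.X_`, the training loop feeds `batch_im` (the original patches) into `self.X_` and `train_images` (the downscaled and re-upscaled patches) into `self.X`, and the target is `self.X + self.X_`. In the usual residual formulation for super-resolution, the degraded patch is the input, the network predicts the residual, and the target is the clean patch. The NumPy sketch below only illustrates that relationship. It is not the code from this post: `degrade` is a hypothetical stand-in for the bicubic resize, and `l2_loss` mirrors `(1 / batch_size) * tf.nn.l2_loss(...)`.

```python
import numpy as np


def degrade(hr, scale=2):
    """Hypothetical stand-in for bicubic down/up-scaling:
    average-pool by `scale`, then repeat each pixel `scale` times."""
    n, h, w, c = hr.shape
    pooled = hr.reshape(n, h // scale, scale, w // scale, scale, c).mean(axis=(2, 4))
    return pooled.repeat(scale, axis=1).repeat(scale, axis=2)


def l2_loss(target, prediction, batch_size):
    """Same formula as (1 / batch_size) * tf.nn.l2_loss(target - prediction)."""
    return np.sum((target - prediction) ** 2) / 2.0 / batch_size


rng = np.random.default_rng(0)
hr = rng.random((4, 8, 8, 1)).astype(np.float32)  # clean patches in [0, 1]
ilr = degrade(hr)                                  # degraded patches: the network input
residual = hr - ilr                                # what the network is asked to predict

# A perfect residual predictor reconstructs the clean patch.
perfect = ilr + residual
# A network that outputs zeros simply returns the degraded input.
untrained = ilr + np.zeros_like(ilr)

loss_perfect = l2_loss(hr, perfect, batch_size=hr.shape[0])
loss_untrained = l2_loss(hr, untrained, batch_size=hr.shape[0])
print(loss_perfect, loss_untrained)
```

If the roles are swapped, the target `X + X_` takes values up to 2.0 instead of staying in the 0-1 range of a normalized image, which could explain reconstructions that look wrong.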
