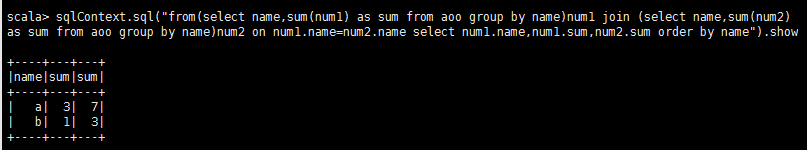

When I was preparing the test data I wrote b,1,3 by mistake; my apologies.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.{SparkConf, SparkContext}

object RDDTest {
  def main(args: Array[String]): Unit = {
    // The master string was garbled in the post; "local[*]" (all local cores) is assumed here
    val conf = new SparkConf().setAppName("RDDTest").setMaster("local[*]")
    val sc = new SparkContext(conf)

    val input = sc.parallelize(List(
      List("a", 1, 3),
      List("a", 2, 4),
      List("b", 1, 1)), 3)

    // Convert each record into a (key, (value1, value2)) pair according to its data types
    val maped: RDD[(String, (Int, Int))] = input.map { x =>
      val key = x(0).toString
      val v1 = x(1).toString.toInt
      val v2 = x(2).toString.toInt
      (key, (v1, v2))
    }

    // Merge by key: value1 is summed with value1, value2 with value2
    val reduced: RDD[(String, (Int, Int))] = maped.reduceByKey(
      (lastValue, thisValue) =>
        (lastValue._1 + thisValue._1, lastValue._2 + thisValue._2)
    )

    // Convert back to the original form
    val result: RDD[List[Any]] = reduced.map(x => List(x._1, x._2._1, x._2._2))

    // Collect and print
    result.collect().foreach(println)
    /**
     * List(a, 3, 7)
     * List(b, 1, 1)
     */

    sc.stop()
  }
}
```
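To check the merge logic without starting Spark, the same per-key, column-wise sum can be sketched with plain Scala collections. This is only an illustration: `SumByKeySketch` and `sumByKey` are made-up names that do not appear in the code above, and the rows are assumed to be typed tuples rather than `List[Any]`.

```scala
object SumByKeySketch {
  // Hypothetical helper (not from the original post): for each key,
  // sums the first value column and the second value column separately.
  def sumByKey(rows: List[(String, Int, Int)]): Map[String, (Int, Int)] =
    rows
      .groupBy(_._1) // key -> all rows sharing that key
      .map { case (key, group) =>
        val summed = group
          .map { case (_, v1, v2) => (v1, v2) }
          // Same combining function as the one passed to reduceByKey
          .reduce((a, b) => (a._1 + b._1, a._2 + b._2))
        key -> summed
      }

  def main(args: Array[String]): Unit = {
    val rows = List(("a", 1, 3), ("a", 2, 4), ("b", 1, 1))
    // Sort by key so the printed order is deterministic
    sumByKey(rows).toList.sortBy(_._1).foreach {
      case (key, (v1, v2)) => println(List(key, v1, v2))
    }
  }
}
```

The combining function is the same as in the Spark version; the difference is that `groupBy` collects every row for a key before reducing, whereas `reduceByKey` combines values within each partition before shuffling.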