
| Which course does this assignment belong to | 2401_Mu_SE_FZU |
|---|---|
| Team Name | Smart Fishpond Access |
| Where is the requirement for this assignment | Fifth Assignment: Alpha Sprint |
| The goal of this assignment | 1. To execute project testing via specific modalities 2. To submit and collect all testing reports |
| Other reference | W3C Web Accessibility Initiative (WAI) - UI Testing for Accessibility |

Content

1. Arrangement of Project Testing Work

2. Selection and Application of Testing Tools

There are 5 students in the Testing Group. The distribution of labor (Testing Part) is shown below.

| Mission | Time | Member | Distribution of Labor |
|---|---|---|---|
| Interface test | 11.28-11.30 | 832201306 | 20% |
| Stress test | 11.29 | 832201313 | 20% |
| UI test | 11.30 | 832201325 | 20% |
| Report Writing | 11.30 | 832201318 | 20% |
| Report Writing | 11.31 | 832201307 | 20% |

Specifically, the detailed distribution of test categories is shown below.

| Test categories | Time | Test content | Specification | Tester |
|---|---|---|---|---|
| Interface test | 11.28 | Login Page Interface Test | Login, Change, Register, Verify API | 832201306 |
| Interface test | 11.29 | Device Location Page Interface Test | getDeviceLocation, warnLog, newDevice, data, push API | 832201306 |
| Interface test | 11.30 | Intelligent Q&A Page Interface Test | getWord, getAnswer API | 832201306 |
| Page test | 11.29 | Test of All Functions on the Page | Test each clickable button on the page one by one | 832201325 |
| Stress test | 11.28 | Stress Test of Interfaces and Pages | Use tools to send requests to pages and interfaces and check their states | 832201313 |

| Test categories | Test tools |
|---|---|
| Interface test | Postman |
| Page test | Microsoft browser |
| Stress test | Apache Benchmark, ApiPost |

Postman is a powerful API testing tool that is commonly used for developing and debugging RESTful APIs. It supports various HTTP request types, including GET, POST, PUT, and DELETE, enabling users to easily create and send requests while monitoring API behavior in real time. Due to its versatility and user-friendly interface, Postman is an excellent choice for addressing the interface testing needs of this project.

In this project, we use a functional testing approach for page testing, relying solely on a browser as the essential tool. This ensures comprehensive validation of page functionality and user interactions.

Apache Benchmark (ab) is a widely recognized command-line tool designed to simulate a specified number of concurrent requests. This allows for the assessment of server performance under various load conditions. Its capabilities make it an excellent choice for the page stress testing needs of this project. For API stress testing, ApiPost is a popular tool that is tailored for debugging, testing, and automating API requests. It supports RESTful APIs, SOAP APIs, GraphQL, and other network interfaces. ApiPost also provides advanced features specifically designed to conduct stress tests, ensuring the reliability and performance of the project's APIs.
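To make "a specified number of concurrent requests" more concrete, here is a minimal Python sketch of an ab-style load loop. It is not how ab itself is implemented, and it is not the script used in this project. The `fake_request` function is a hypothetical stand-in that only sleeps for 10 ms; in a real run it would be replaced by an actual HTTP call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request():
    """Hypothetical stand-in for one HTTP request; replace with a real call."""
    time.sleep(0.01)
    return 200


def run_load(send, total, concurrency):
    """Issue `total` calls to `send`, with at most `concurrency` in flight."""

    def timed_call(_):
        start = time.perf_counter()
        try:
            ok = send() == 200
        except Exception:
            ok = False  # any exception counts as a failed request
        return ok, time.perf_counter() - start

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total)))
    wall = time.perf_counter() - wall_start

    latencies = [elapsed for _, elapsed in results]
    return {
        "total": total,
        "failures": sum(1 for ok, _ in results if not ok),
        "seconds": wall,
        "requests_per_second": total / wall,
        "mean_latency_ms": 1000 * sum(latencies) / len(latencies),
    }


if __name__ == "__main__":
    print(run_load(fake_request, total=100, concurrency=10))
```

The thread pool caps the number of requests in flight, which plays the role of the concurrency level in a stress test; the returned dictionary mirrors the kind of summary a load tool prints (total time, throughput, failures).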

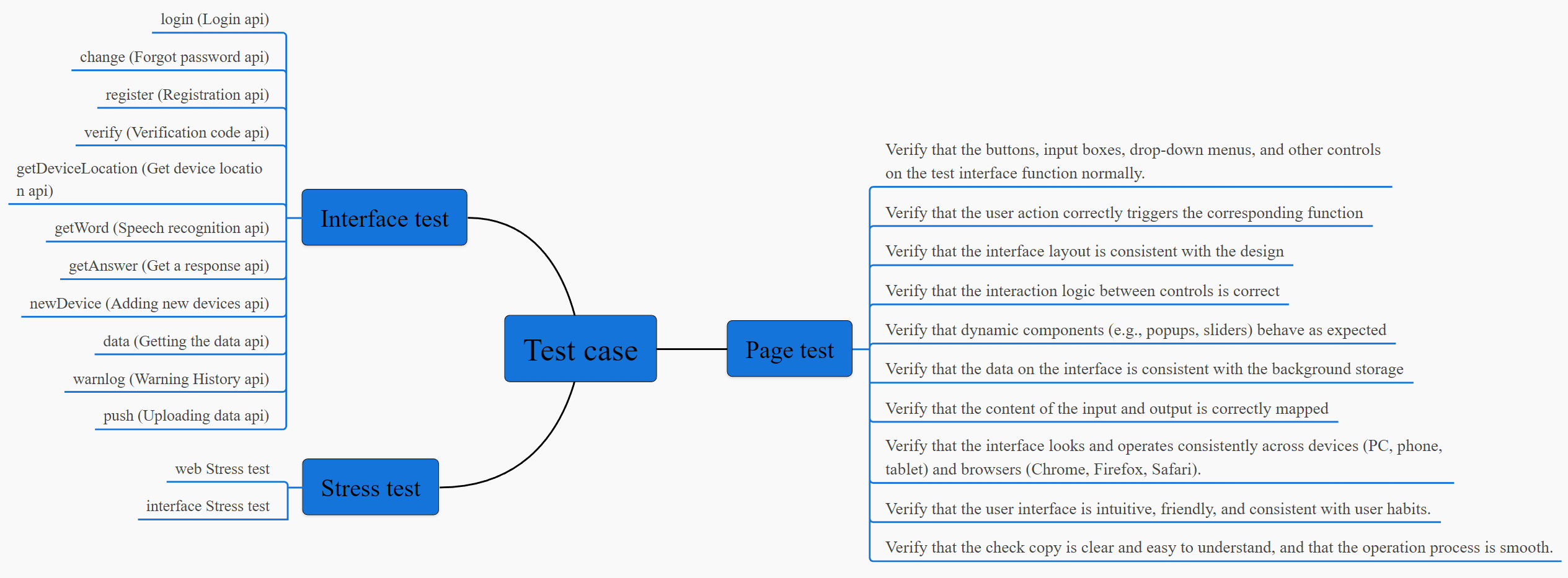

Fig.1 Test case diagram

As shown in Fig. 1, this project involves three key types of testing: interface testing, UI testing, and stress testing. Interface testing primarily focuses on verifying the functionality of the backend APIs connected to frontend actions, ensuring they respond accurately and perform as expected. In contrast, UI testing evaluates the effectiveness and usability of the frontend features of the application. Stress testing, conducted on both the pages and the APIs, assesses their performance and stability under high-load conditions.

Before these tests, white-box testing had already been conducted to verify the correctness of the code logic.

Debugging has been completed, and all code runs as expected.

Additionally, the development team further refactored the core code by removing redundant elements, optimizing performance, and enhancing the overall efficiency and lightweight nature of the code.

As a result, two main testing methodologies were employed in this project: functional testing for UI testing and black-box testing for interface and stress testing.

In this interface test, the project employs a black-box testing approach to evaluate the system's inputs and outputs without examining its internal workings. The functionality of the interfaces is verified by sending different types of HTTP requests using the Postman tool. The detailed process and results are documented below.
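As a rough illustration of the black-box approach, the following Python sketch sends one request and inspects only what is visible from outside: the status code, the response body, and the elapsed time. It runs against a local stub server so that it is self-contained. The `/getDeviceLocation` route, the JSON fields, and the 500 ms limit are assumptions made for this example and do not describe the project's real API contract.

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubHandler(BaseHTTPRequestHandler):
    """Stand-in for the real backend; route and payload are hypothetical."""

    def do_GET(self):
        if self.path == "/getDeviceLocation":
            status, body = 200, {"code": 0, "data": {"lat": 26.08, "lng": 119.3}}
        else:
            status, body = 404, {"code": 404}
        raw = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(raw)))
        self.end_headers()
        self.wfile.write(raw)

    def log_message(self, *args):
        pass  # keep console output quiet


def check_endpoint(url, max_ms=500.0):
    """Black-box check: status code, payload, and elapsed time only."""
    # An empty ProxyHandler makes sure the request goes straight to the URL.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    start = time.perf_counter()
    with opener.open(url, timeout=5) as resp:
        status = resp.status
        payload = json.loads(resp.read())
    elapsed_ms = (time.perf_counter() - start) * 1000
    passed = status == 200 and payload.get("code") == 0 and elapsed_ms <= max_ms
    return {"status": status, "payload": payload,
            "elapsed_ms": elapsed_ms, "passed": passed}


def run_demo():
    server = HTTPServer(("127.0.0.1", 0), StubHandler)  # port 0 picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        return check_endpoint(f"http://127.0.0.1:{port}/getDeviceLocation")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(run_demo())
```

Postman performs the same kind of check interactively; a script like this is only useful when the checks need to be repeated automatically.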

In this project, UI testing is conducted using a functional testing approach to verify the functionality and interactive behavior of the system's pages. By simulating user interactions, the tests evaluate the performance of interface elements and the consistency of page layouts, ensuring that the design is intuitive and user-friendly. This testing includes assessing the operation of controls such as buttons and input fields, as well as the behavior of interface logic and dynamic components. The detailed processes and results are summarized in the following document.

For the stress test, this project utilizes Apache Benchmark for network performance testing and ApiPost for interface stress testing, both adhering to the black-box testing methodology. The main process and results are outlined in the following document.

832201306: In this interface test, I primarily used Postman to evaluate the functionality of the APIs. Each test successfully sent requests and returned results as expected, with response times falling within an acceptable range. For instance, the response time for the newDevice interface was 159 ms, while the data interface had a response time of 88 ms—both of which were within the expected limits. I configured the request headers and bodies in accordance with the interface documentation, and the returned data matched my expectations. During testing, I encountered an issue where the data returned was incorrect due to improperly set request parameters. However, Postman provided detailed error logs and response information, which allowed me to quickly resolve the problem. Overall, this test provided a clear understanding of the interfaces' stability, performance, and accuracy.

832201325: The web interface test was conducted successfully. I reviewed each control on the page, including buttons, input fields, and drop-down menus, to confirm that users could interact with them easily and that the correct functions were triggered. The layout and design remained consistent across mobile phones, tablets, and PCs. Dynamic components like pop-ups and sliders functioned as expected, and the input data was accurately stored in the backend, ensuring data integrity. The interface design was intuitive, navigation was smooth, and the text was clear and easy to understand, leading to an overall positive user experience. In summary, the web interface test demonstrated stable functionality and received favorable feedback from users.

832201313: For the stress test, I utilized Apache Benchmark to evaluate the web pages and ApiPost to assess the APIs, with the goal of determining the system's performance under high traffic conditions. The web page stress test simulated 1,000 requests with 10 concurrent users. The results indicated that the system completed the test in 24.577 seconds, achieving an average throughput of 40.69 requests per second. Additionally, the data transfer rate met our expectations. Taking the getDeviceLocation interface as an example, both the response time and error rate remained within acceptable limits. Overall, the system performed well under moderate load; however, if the user volume significantly increases, further optimizations may be necessary to maintain stability under higher concurrency.
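The throughput figure above can be reproduced from the request count and total time. The short Python sketch below does this arithmetic and also derives the two "time per request" values in the way ab defines them. Those two values are calculated here from the reported numbers; they were not quoted in the original test output.

```python
def ab_summary(total_requests, concurrency, seconds):
    """Recompute ab-style summary figures from the raw totals."""
    return {
        # Completed requests divided by total wall-clock time.
        "requests_per_second": total_requests / seconds,
        # Mean time per request as seen by one simulated user.
        "time_per_request_ms": concurrency * seconds * 1000 / total_requests,
        # Mean time per request across all concurrent users.
        "time_per_request_overall_ms": seconds * 1000 / total_requests,
    }


if __name__ == "__main__":
    summary = ab_summary(total_requests=1000, concurrency=10, seconds=24.577)
    for name, value in summary.items():
        print(f"{name}: {value:.2f}")
```

With 1,000 requests, 10 concurrent users, and 24.577 seconds, this gives about 40.69 requests per second, which matches the reported rate, and a mean of roughly 245.77 ms per request from the point of view of a single simulated user.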

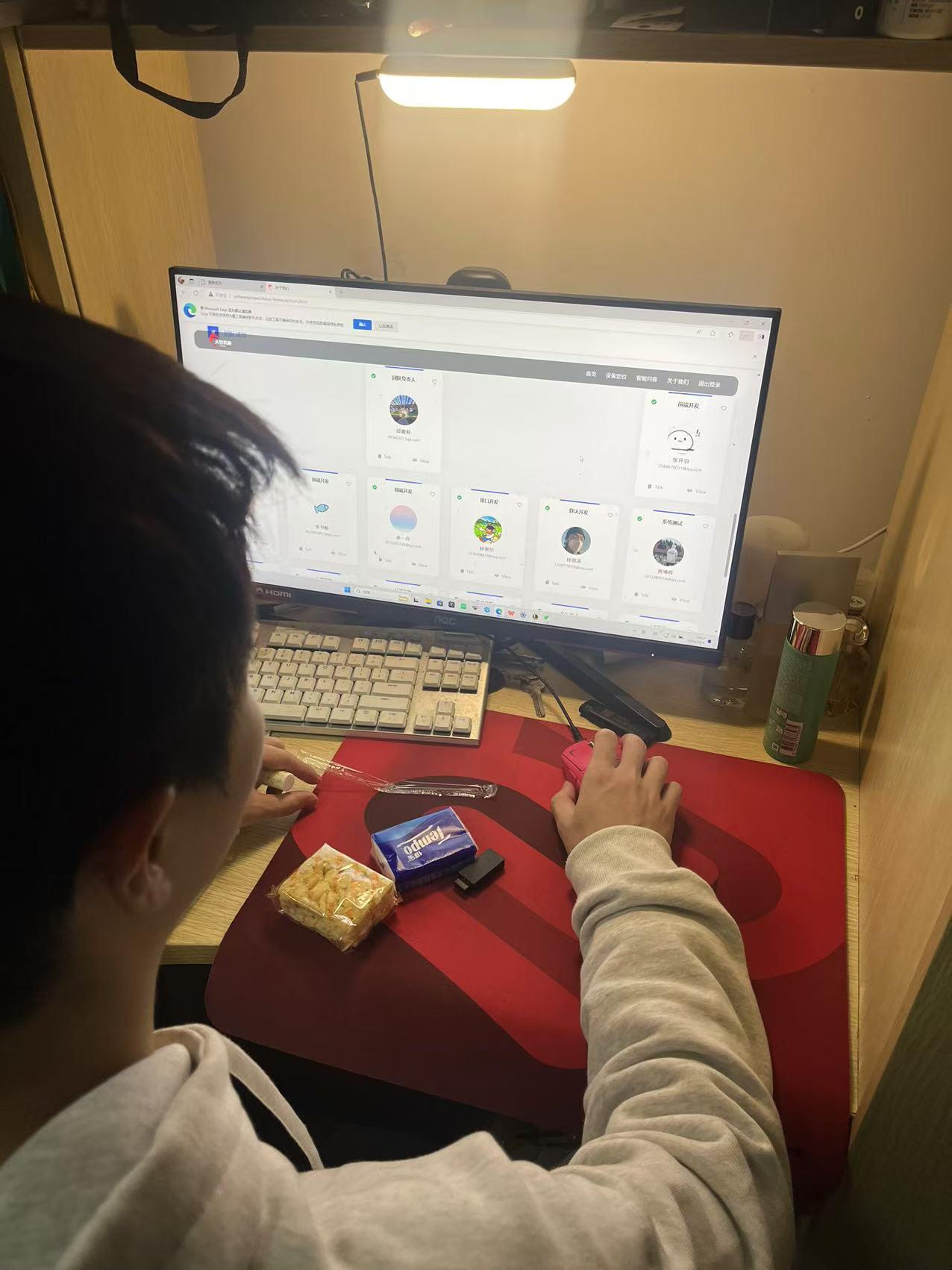

Photos of the testing process are shown below.

After comprehensive testing, we have thoroughly validated the stability, reliability, and performance of the cloud function interfaces, as well as the overall functionality of the system. The development team promptly identified and addressed potential issues, ensuring the smooth operation of the web page. The testing phase has been successfully completed, significantly enhancing our testing skills, fostering collaboration, and laying a solid foundation for the project's launch.

Looking ahead, we will continue to optimize the testing process during the beta (β) sprint phase by integrating advanced testing methods and tools to improve both efficiency and quality. We will implement a strategy that synchronizes testing and development throughout the software lifecycle, allowing for early detection and resolution of potential bugs. This approach will significantly reduce the cost of error correction in later stages. With real-time testing and continuous code review processes in place, we will quickly identify and resolve issues, enhancing the software's functionality and stability. Ultimately, this will provide users with an improved experience and support the ongoing, stable development of the project.